On Human Nature(s) 3: Affordances, Heuristics and Biases, and Schemata

Marc Green

How System 1 Works

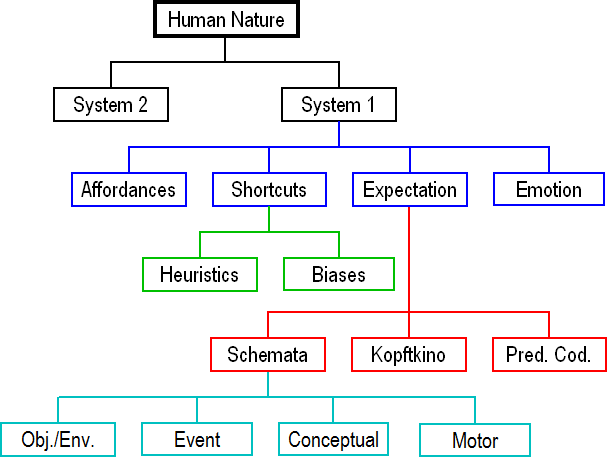

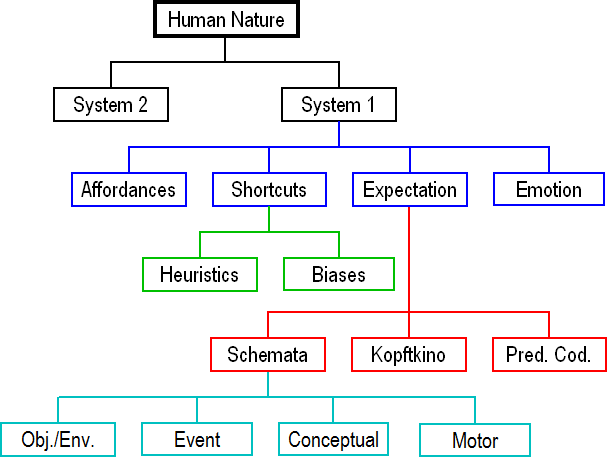

I’ve outlined what System 1 is but not how it works. System 1 is a collection of kludges that circumvent the constraints of our limited mental resources and allow the intuitive, automatic decision-making that guides our behavior. For didactic purposes, I’ve lumped these kludges into the four general categories shown in Figure 1 below1, which might also be taken as a rough schematic of human mental architecture. The ensuing discussion follows the outline suggested by the branches.

Figure 1. Human nature divides into two main branches, each consisting of an assortment of different kludges with subkludges to circumvent human information-processing limitations. Only System 1 tree branches are discussed here.

- Affordances. In many cases, objects intuitively communicate suggested actions directly to the user;

- Decision “short-cuts.” Heuristics and biases are reasoning kludges that lower mental effort by reducing the amount of information required to reach a decision;

- Expectation generation. Humans typically don’t enter situations as blank slates. Rather, they have expectations derived from prior experience and learning. These expectations create assumptions that need not be confirmed, lowering mental workload. There are several expectation mechanisms, including schemata, Kopfkino, and predictive sensory coding. I spend the most time on schemata because they are probably the most commonly used kludges; and

- Emotion. Humans have inborn emotional processing that allows them to act much faster, and possibly more safely, when System 2 is too slow or when expectation generation fails. Emotions may be better regarded as System 0 because they are the most primitive, reptilian influence on decision and behavior.

Before starting, a few more disclaimers. The discussion below is only surface level, at least from a scientific standpoint, but it is sufficient to convey the key concepts. Further, not all of these kludges operate in any given situation; which ones matter most depends on the circumstances.

1. Affordances

When a viewer sees an object, he effortlessly perceives its sensory qualities, such as size, shape, and color. The viewer also sees what J. J. Gibson called its “affordances,” the actions that the object enables. For example, a person who sees a park bench immediately knows that he may sit on it. Similarly, a person seeing a large rock knows that he can sit on that too. Both objects offer a sitting “affordance.”

In his clearest statement, Gibson said “The affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill” (Gibson, 1979). He was not quite willing to say that affordance perceptions are innate, but suggested that they “are usually perceivable directly, without an excessive amount of learning” (Gibson, 1979). From this “ecological” viewpoint, behavior is ultimately guided not just by what the viewer sees but also by what he can do. This is what Gibson meant by saying that perception is bound up with action and that the actor is tied to the environment. It is also why object classification based solely on sensory properties is problematic and often fails: humans automatically classify objects by their affordances.

An important related concept is the “action boundary,” the dividing line between possible and impossible actions. Flat horizontal surfaces afford placing objects, and a bookshelf affords placing objects on several surfaces (the shelves). If the bookshelf is tall, however, the viewer may not be able to reach the top shelf. He perceives an action boundary, a border between the shelves that are reachable and those that are not. Likewise, drivers develop a sense of how fast they can travel and how closely they can safely approach other road users, their “field of safe travel” (Gibson and Crooks, 1938) or their fault tolerances. Action boundaries sometimes require learning before they become automatic.

Interestingly, people are much better at perceiving such boundaries than at giving verbal estimates. In one study (Ayres, Bjelajac, Fowler, & Young, 1997), for example, ATV drivers were asked to estimate the slopes of hills. As in most slant perception studies, viewers wildly overestimated slopes. When instead asked whether they could make it up the hill in their vehicles (an affordance judgment), their responses were highly accurate. This reflects the more general research and courtroom problem that humans are simply not very good at making quantitative judgments because our perceptual systems are designed to make relative rather than absolute judgments (Green, 2024).

Decision short-cuts: Heuristics, Biases and Satisficing

System 1 decisions are heavily influenced by “heuristics,” rules of thumb that produce good but not necessarily perfect results, and “biases,” preferences for one decision or response over another. A decision-maker is most likely to use a heuristic and/or bias automatically in two circumstances. One is when information is scarce, so a decision must be made on limited knowledge. In fact, humans usually must act under “bounded rationality” because they cannot know everything. The second is when time is scarce, so the decision-maker trades off accuracy to gain speed. Obviously, a situation in which both information and time are scarce is the most likely to induce a heuristic or biased decision. Both kinds of short-cut promote the cognitive ease that humans prefer.

Various authors have proposed a wide variety of heuristics. For present purposes, I just list some of the most notable:

- The availability heuristic. Decide based on the information that most readily comes to mind. That information may be the most important or task-relevant. Since humans prefer cognitive ease, it may also be the information that is most easily processed because it is simple and/or readily perceived. Last, availability may depend on when the information was learned. The most recently learned information causes “priming” (e.g., Tulving & Schacter, 1990), which strongly influences subsequent interpretations of what is seen and attended. The first information learned may also be highly available. When information is learned sequentially, the first and last items are least affected by forward and backward interference in memory, resulting in the well-known “primacy” and “recency” memory effects;

- The representativeness heuristic. Judge that two objects/individuals that share one property are likely to share others. If most gang members have guns and you encounter a gang member, you judge that he very likely has a gun. This affects the perceived signal probability, as discussed below;

- The take-the-best heuristic. Instead of using a vector of features, decide based on the single most diagnostic feature. This is related to the “anchor and adjust” method that grounds decision-making on a single feature and then fine-tunes using others. For example, someone who likes hot weather might decide that he wants to live in the South, but then fine-tune the decision by the size or location of the exact city/state;

- The affect heuristic. Choose based on the “goodness” or “badness” feeling invoked by an object or environment. The goodness or badness can be innate (a snake) or learned (a company with lousy service). The affect heuristic often unconsciously influences even seemingly analytical (System 2) decisions. The “buyers are liars” observation above is an example: the house evokes feelings that guide decision-making. In fact, it is very difficult to escape the affect heuristic because most objects and environments invoke an affect that can influence the decision.
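The take-the-best heuristic is easy to state as a procedure. The sketch below follows the pairwise formulation usually associated with Gigerenzer and colleagues (an assumption; the text above describes it only as deciding on the single most diagnostic feature): cues are checked in order of diagnosticity, and the first cue on which the options differ settles the choice. The cities, cues, and cue order are hypothetical.

```python
from typing import Callable, Optional, Sequence

# A cue reports whether an option has a feature (True/False).
Cue = Callable[[dict], bool]


def take_the_best(a: dict, b: dict, cues: Sequence[Cue]) -> Optional[dict]:
    """Choose between two options using take-the-best.

    `cues` must be ordered from most to least diagnostic. The first cue on
    which the options differ decides the choice, and every remaining cue is
    ignored. Returns None when no cue discriminates (the decider must guess).
    """
    for cue in cues:
        has_a, has_b = cue(a), cue(b)
        if has_a != has_b:
            return a if has_a else b
    return None


# Hypothetical options and cue order, loosely following the hot-weather example.
city_a = {"name": "City A", "hot": True, "large": False}
city_b = {"name": "City B", "hot": False, "large": True}
city_c = {"name": "City C", "hot": True, "large": True}
cues = [lambda c: c["hot"], lambda c: c["large"]]

print(take_the_best(city_a, city_b, cues)["name"])  # City A: "hot" decides, "large" is never consulted
print(take_the_best(city_a, city_c, cues)["name"])  # City C: both are hot, so "large" breaks the tie
```

A lower-ranked cue can never overturn a higher-ranked one, which is what makes the procedure frugal; a weighted-sum model would instead consult every cue.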

“Bias” in cognitive psychology does not have the pejorative connotation that it has in common discourse. Instead, it is simply the tendency to decide in a given direction in uncertain circumstances. Again, there is a wide variety of biases, but for present purposes the most important are:

- Probability bias. Assume that the most probable interpretation is true, producing a response that is both more likely and faster. While this may seem obvious, determining what is most probable is not so simple. Probability is not necessarily a statistical concept. Humans tend to think causally, not statistically. Probability may reflect strength of belief arising from many sources, including causal reasoning, affect, and emotion;

- Confirmation bias. Seek out information that confirms pre-existing belief and ignore or discount contradictory evidence. It is one of the most powerful mental short-cuts that humans employ to simplify understanding of the world. However, it is well known that it can also lead to disastrous decisions. More lives have probably been lost to people acting under the influence of confirmation bias than under the influence of alcohol. It might then be asked why such a strong bias has evolved if it can lead to disastrous results. One possibility is that it reinforces past success. If a belief previously led to a positive outcome, it is more likely to lead to success in the future. Another is that it encourages faster action, since the decider does not spend time weighing pros and cons or become immobilized by indecision. Others (e.g., Peters, 2020) argue that its usefulness lies not with individuals but with groups because it fosters coherence of group opinion and action. Moreover, confirmation bias likely receives its negative press because of a selection bias: people point to confirmation bias only when the outcome is negative and ignore the times when it leads to correct actions. All the other System 1 functions, especially emotion, suffer the same fate of being viewed negatively because only negative instances attract attention. In general, humans never question decisions that produce positive outcomes and view them as a normal background. Only negative events draw much scrutiny and criticism; and

- Response biases. Although response selection is not often considered a decision, humans often must choose among alternative responses, and they have innate biases to make one physical response over another. Many fall under the classification of “stimulus-response” or “S-R” compatibilities. That is, some environmental situations automatically bias the response. For example, humans respond faster to an object on the left with the left hand and to an object on the right with the right hand. In contrast, if a threat comes from the left, humans will automatically attempt to avoid it by moving in the opposite direction, to the right, and vice versa2. Other S-R compatibilities are better described as “mappings,” the relationship between the environmental layout and the array of possible responses (see the stove example in the page “Human Error vs. Design Error”). Broadly speaking, affordances could also be viewed as S-R compatibilities.

The discussion of response biases can be extended by considering that there is often a conflict among possible responses. The study of response conflicts began with Lewin's (1935) psychological field theory, which he derived from the physics field theories of his day. According to this theory, humans live in a psychologically defined field in which they are attracted to objects of positive (+) valence and attempt to avoid objects of negative (−) valence. In the threat example above, an actor moves away from the threat (−) toward safety (+) if there is an escape route. There is no conflict in deciding upon a response.

This seems obvious enough, but sometimes responses conflict. Ask a child whether he wants cake (+) or ice cream (+) for dinner, and the choice is between two positive valences, creating an “approach-approach” conflict. Deciders resolve such conflicts relatively quickly and easily. Conversely, there are “avoidance-avoidance” conflicts. Ask a child whether he would prefer spinach (−) or broccoli (−) for dinner, and the response is likely to be very slow in coming. Avoidance-avoidance conflicts are very stressful and difficult to resolve.

Response conflicts can also be “approach-avoidance,” when a single object has both positive and negative valence. For example, someone may be attracted to a good-looking person (+) but fear rejection (−) if he makes an approach. Perhaps the most stressful and complex conflict in decision-making is the “double approach-avoidance” conflict, where there are two objects that each have both positive and negative valence. A person may have to choose between two jobs: one is more satisfying (+) but pays less money (−), while the other pays very well (+) but is boring (−).

Double approach-avoidance situations are the most difficult to resolve and produce the slowest response, and often no response at all. I’ve investigated this in “underride” collisions, where a driver suddenly sees a tractor-trailer stretched perpendicularly across the road, blocking all lanes of traffic. What are his alternatives? There is not enough distance to stop by braking. He can steer rightward, which escapes the collision by going to where the tractor-trailer is not located (+) but would put him in a ditch (−), an approach-avoidance conflict. He can steer leftward, which avoids the trailer (+) but leads into oncoming traffic (−), another approach-avoidance conflict. He is caught in a double approach-avoidance conflict. In such situations, drivers hesitate and vacillate, and they often drive straight into the trailer without any response. In sum, actors may be biased to respond based on valence, but sometimes the valences conflict. I'll return to this in section 5.
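Lewin's conflict types depend only on how many options are available and which valences each carries, so they can be written as a small classifier. This is an illustrative sketch, not a model taken from Lewin (1935): the encoding of valences as sets and the "unclassified" fallback for cases the text does not discuss are assumptions. It labels the conflict but says nothing about response latency.

```python
# Valence sets for an option: approach only, avoid only, or both.
POS = frozenset({"+"})
NEG = frozenset({"-"})
MIXED = frozenset({"+", "-"})


def classify_conflict(*options: frozenset) -> str:
    """Label a choice with the conflict types described above (after Lewin).

    Each argument is one available option, given as the set of valences it
    carries. Only the one- and two-option cases from the text are handled.
    """
    if len(options) == 1:
        return "approach-avoidance" if options[0] == MIXED else "no conflict"
    if len(options) != 2:
        raise ValueError("this sketch handles one or two options only")
    a, b = options
    if a == b == POS:
        return "approach-approach"
    if a == b == NEG:
        return "avoidance-avoidance"
    if a == b == MIXED:
        return "double approach-avoidance"
    if {a, b} == {POS, NEG}:
        return "no conflict"  # e.g., move away from a threat toward an escape route
    return "unclassified"  # one mixed and one pure option: not covered in the text


print(classify_conflict(POS, POS))      # cake vs. ice cream
print(classify_conflict(NEG, NEG))      # spinach vs. broccoli
print(classify_conflict(MIXED))         # attractive person vs. fear of rejection
print(classify_conflict(MIXED, MIXED))  # steer right (ditch) vs. steer left (oncoming traffic)
```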

Final Comments on Heuristics and Biases

Before leaving the topic of heuristics and biases, it's worth mentioning the controversy about their adaptiveness. I have suggested that they are kludges that evolved to help circumvent our limited mental resources in a world full of information. This is not a universally accepted opinion. Kahneman and his many followers believe that heuristics and biases represent failure and irrationality in human decision-making. Perhaps the most prominent examples of supposed human “irrationality” are the “Linda Problem” and “base rate neglect.” I won’t discuss the “Linda Problem” here, as it would require too much digression, but many other authors have disputed Kahneman’s belief that it demonstrates human irrationality3. Instead, I'll just examine “base rate neglect,” where the decider apparently places insufficient weight on prior probability, at least according to Bayesian inference models. Many (e.g., Kahneman) say that the neglect creates error and irrationality because decisions then depart from a norm derived from a Bayesian statistical model of optimal choice. This conclusion is questionable. First, humans tend, quite properly, to reason from the most specific information available rather than from simple probability. Second, in many, if not most, cases humans have no way of knowing the statistical prior probability anyway, especially in emergencies, which are often singular events without precedent (see below). Third, humans trade probability off against payoffs. They may choose a low-probability, high-payoff outcome over a high-probability, low-payoff one. Fourth, as already explained, humans tend to think causally4 and to assume that objects and events are not statistically independent but appear in patterns. The negative view also doesn’t explain why these mental short-cuts evolved if they produce bad results. The only argument I’ve seen is that modern man makes qualitatively different decisions than our primitive ancestors did and that heuristics and biases are relics of the past. Unfortunately, there are no primitive ancestors around to ask.
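To make base rate neglect concrete, here is Bayes' rule applied to hypothetical numbers chosen only for illustration (they come from no study): a condition H with a prior, or base rate, of 1%, and evidence E that appears 90% of the time when H is present and 10% of the time when it is absent.

$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)}
= \frac{0.9 \times 0.01}{0.9 \times 0.01 + 0.1 \times 0.99}
= \frac{0.009}{0.108} \approx 0.083
$$

A decider who neglects the base rate and answers about 0.9 departs from this Bayesian norm. Note, though, that the calculation requires P(H) to be known, which, as the second objection above points out, is often not the case.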

There is also the opposite view, best exemplified by Gigerenzer and his “fast and frugal heuristics that make us smart.” He suggests that heuristics and biases are a highly adaptive way to deal with the “bounded rationality” problem. Human decision-makers almost never have complete information, such as base rates. Heuristics and biases allow for speedy, accurate decision-making on partial information. Unlike in Kahneman’s view, there is no contradiction between their adaptiveness and their common usage. In fact, the ability of human reasoning to go beyond available information is one of its great strengths. Norman (2005) summarizes this by saying:

People excel at perception, at creativity, at the ability to go beyond the information given, making sense of otherwise chaotic events. We often have to interpret events far beyond the information available, and our ability to do this efficiently and effortlessly even without being aware that we are doing so, greatly adds to our ability to function. This ability to put together a sensible, coherent image of the world in the face of limited evidence allows us to anticipate and predict events, the better to cope with an ambiguous and ever changing world.

Expectation Generation

Norman mentions that event prediction is a key ability in human reasoning. It plays a critical role in guiding action and in anticipating the outcomes of action. James (1890) likened prediction to “pre-perception” that enables action with minimal mental processing when a situation is encountered. Behavior is then much more efficient when based on perceptual predictions (LaBerge, 1995). Predictive reasoning relies on three kludges. The discussion here examines the first, schemata; the next section examines the other two, Kopfkino and predictive coding.

While affordances, heuristics, and biases are either innate or develop very soon after birth, System 1 has another, bigger trick up its sleeve: learning from experience to develop expectations. Many psychologists and neuroscientists have suggested that the brain's primary function is to recognize recurring patterns, so that experience is not just one thing after another, each requiring the actor to analyze every detail in depth. Instead, we store the past in organized memory structures usually called “schemata.” This drive toward finding patterns that can be stored as schemata is extremely powerful and difficult to turn off (e.g., Shermer, 2011).

In practice, when we encounter a situation, we automatically detect cues that invoke the relevant schema so that we don't have to analyze the world in detail. Since System 1 works holistically on the scene, the specific cues that invoke the schema may not be readily identifiable (see below). It is important to note that we have no conscious control over the schema that we invoke. Somewhere in our brain, something is saying "Oh, it's one of those again. I know what to do." We hold expectations that we assume without having to verify them, which saves a lot of work. As noted previously, information can arise from only two sources. One is the light entering the eyes (and the input to the other senses); the other is information stored in memory. It is much faster and more efficient for information to be supplied automatically from an internal store than to process and interpret new sensory information from the environment. In a sense, the internal store contains previously experienced sensory information that has already been predigested, so it is faster and easier to use. This can lead to a “recognition-primed decision” (RPD) (Klein, 1993): the user perceives the situation and automatically decides and responds without analysis. It is like the parallel search, as opposed to the serial search, demonstrated in the previous section.

Schemata come in several varieties, although the list might vary somewhat from source to source. Most would include object/environment schemata and event schemata (“scripts”). Another important type is the “motor” schema.6

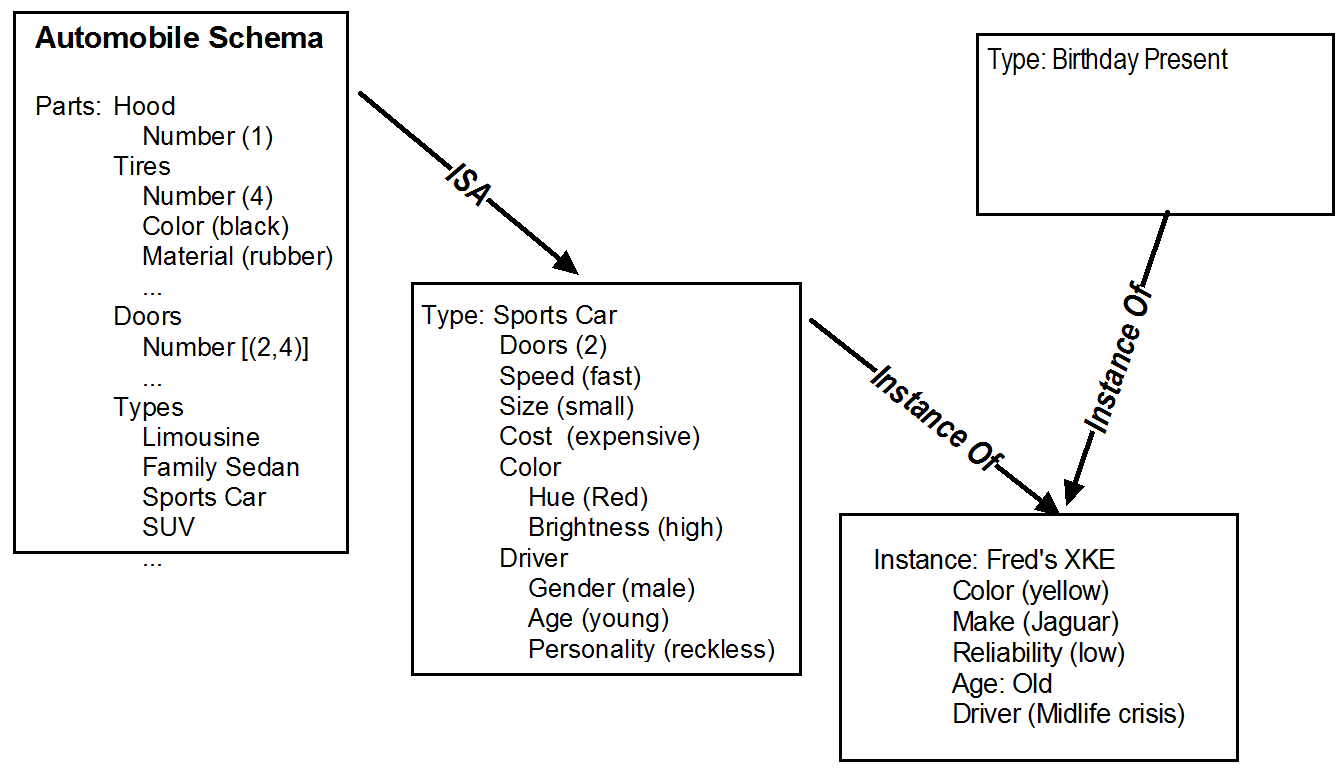

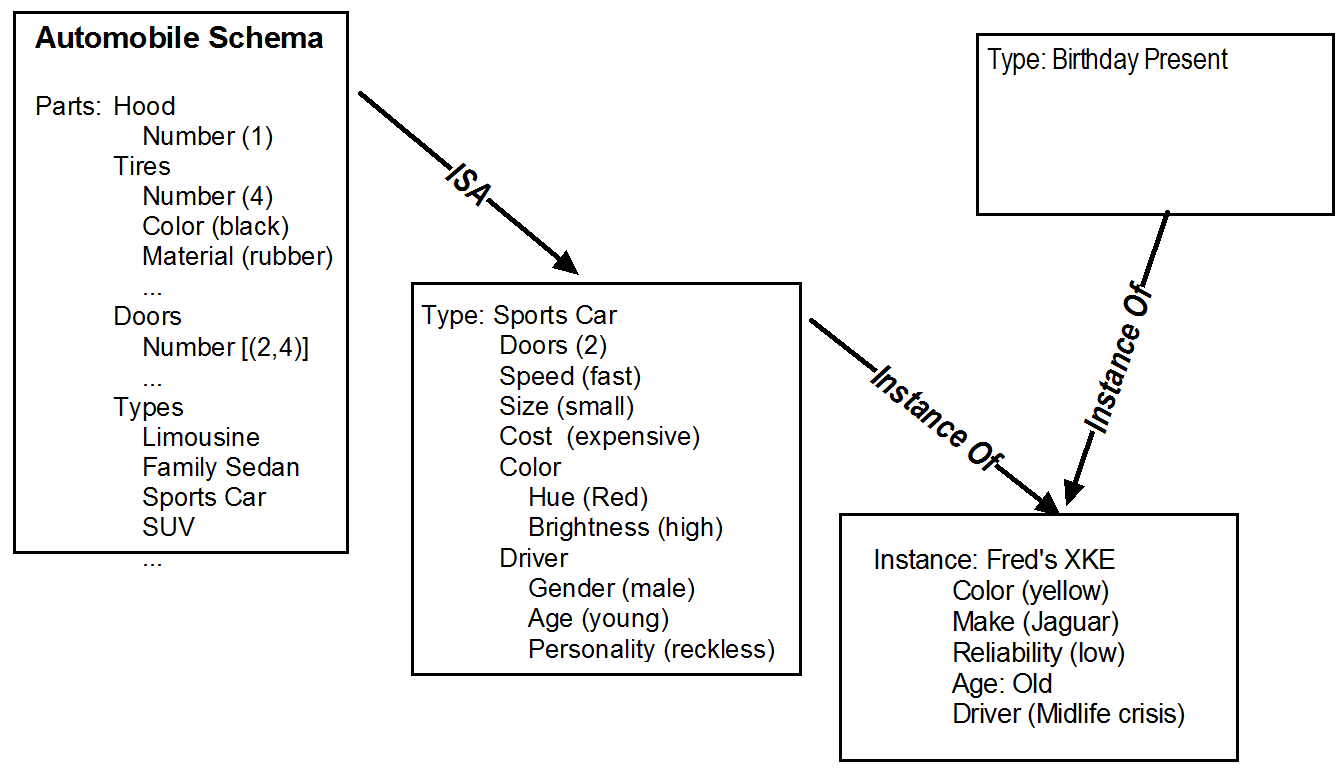

1. Object schemata. Figure 2 shows an example of an object schema. Schemata are generally depicted in an O-A-V (object-attribute-value) representation called a “frame.”7 The figure shows a highly stylized automobile schema: an object with attributes (“slots”), such as color and number of tires, that take values. Values often have defaults (shown in parentheses). For example, the schema’s default value for the number of hoods is one. That is, cars are assumed (expected) to have one hood unless strong evidence says otherwise. In some cases, slots define a range of possibilities (shown in brackets), as in the case where cars can have two or four doors. There are other possible types of slots, such as the “procedural attachment,” which specifies an algorithm for calculating a required value.

Figure 2. A schema has slots for O-A-V values that can be assumed without the need for object/scene analysis.

Schemata often form hierarchies. The subschema “sports car” inherits the values from the parent, with two exceptions. First, the child may add slots not present in the parent. For example, it has a slot for driver age, which presumably wasn’t an important attribute for cars in general. Second, it can override parental values, so the default color is now red. Being more specific than the parent, the child schema’s values take precedence. Again, humans prefer making decisions based on the information most specific to the situation.

Lastly, there can also be descriptions of specific examples that are related to a schema by an “Instance Of” link. In Figure 2, Fred’s XKE is a specific “instance of” the sports car schema. An instance also inherits the properties of its parent, grandparent, and so on. Fred’s XKE is a sports car, so it is used for travel and has one hood and four tires. It is driven by a young, reckless person and travels fast. However, the instance can add specific information (make: Jaguar) and override inherited values with specific information (color: yellow). Again, specific knowledge dominates over general knowledge.
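The slot-and-default behavior of frames maps naturally onto a small data structure. The following Python sketch is a hypothetical illustration, not a standard frame library: each frame holds its own slot values and a link to its parent, and a lookup walks up the chain, so the most specific value wins. Slot names and values follow the text's description of Figure 2 (the figure itself may contain more); the car's default color is omitted because the text doesn't give it. For simplicity, one link type serves for both the subschema and the "instance of" relation.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class Frame:
    """An O-A-V frame: a named object whose slots (attributes) hold values.

    `parent` is the single link upward, used here for both the subschema
    ("is a") link and the "instance of" link.
    """
    name: str
    slots: dict[str, Any] = field(default_factory=dict)
    parent: Optional["Frame"] = None

    def get(self, slot: str) -> Any:
        # Walk from the most specific frame upward; the first frame that
        # fills the slot wins, so specific values override inherited defaults.
        frame: Optional[Frame] = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(f"no frame above {self.name!r} fills slot {slot!r}")


# "doors" holds the allowed range rather than a single default.
car = Frame("car", {"used for": "travel", "hoods": 1, "tires": 4, "doors": (2, 4)})
sports_car = Frame(
    "sports car",
    {"color": "red", "driver age": "young", "driver personality": "reckless", "speed": "fast"},
    parent=car,
)
freds_xke = Frame("Fred's XKE", {"make": "Jaguar", "color": "yellow"}, parent=sports_car)

print(freds_xke.get("hoods"))   # 1, inherited from car
print(freds_xke.get("speed"))   # fast, inherited from sports car
print(sports_car.get("color"))  # red, the sports car default
print(freds_xke.get("color"))   # yellow, the instance overrides the default
```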

Frames may have “multiple inheritance.” A schema or instance can receive contributions from more than one parent. Fred’s XKE could have been a birthday present, so it also inherits O-A-V slots from a birthday present schema. The frames are essentially nodes in an inheritance network.
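Multiple inheritance can be sketched with Python classes, whose attribute lookup already searches several parents. Treat this as an analogy rather than a claim about memory: when two parents fill the same slot, Python resolves the clash with its method resolution order (C3 linearization), a programming-language convention; the text does not say how human schemata resolve such clashes. The `GiftedSportsCar` class and the birthday-present slots are hypothetical.

```python
# Each schema is a class; class attributes act as default slot values.
class Car:
    used_for = "travel"
    hoods = 1
    tires = 4


class SportsCar(Car):
    color = "red"
    speed = "fast"


class BirthdayPresent:
    # Hypothetical slots: the text does not list this schema's contents.
    occasion = "birthday"
    expected_response = "gratitude"


class GiftedSportsCar(SportsCar, BirthdayPresent):
    """Hypothetical frame with two parents (multiple inheritance)."""


freds_xke = GiftedSportsCar()  # the instance
freds_xke.make = "Jaguar"      # added specific information
freds_xke.color = "yellow"     # overrides the inherited default

print(freds_xke.hoods, freds_xke.occasion, freds_xke.color)  # 1 birthday yellow
print([c.__name__ for c in GiftedSportsCar.__mro__])
# ['GiftedSportsCar', 'SportsCar', 'Car', 'BirthdayPresent', 'object']
```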

For present purposes, the important point is that schemata decrease the demand on our limited mental capacity. Once the viewer sees cues (which can be the scene as a whole) that invoke a specific schema, the slots automatically fill with the default values. The viewer already “knows” much about the current situation, so there is no need to scrutinize every detail. Once the sports car schema is invoked, the viewer has expectations about the car's speed and size and the driver's age and personality. To be sure, the default values can be overturned by focusing attention on sensory evidence, but the viewer may not always attend to that evidence because of limited time, complexity, or mere efficiency. Moreover, some attributes, such as “reckless personality,” cannot be directly observed and are merely assumed.
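The economy of invocation can be illustrated with a toy model: a few perceived cues select a schema, every slot is filled from defaults, and only evidence the viewer actually attends to replaces a default. Everything here (the cue sets, the second schema, and overlap counting as the matching rule) is a hypothetical simplification; as noted above, real invocation is holistic and its cues may not be identifiable.

```python
# Hypothetical schemata: the cues that invoke each one and its default slot values.
SCHEMATA = {
    "sports car": {
        "cues": {"low profile", "two doors", "loud exhaust"},
        "defaults": {"speed": "fast", "driver age": "young", "driver personality": "reckless"},
    },
    "family sedan": {
        "cues": {"four doors", "upright roof", "child seat"},
        "defaults": {"speed": "moderate", "driver age": "adult"},
    },
}


def invoke(cues: set[str]) -> str:
    """Return the schema whose cues overlap most with what was perceived.

    Ties (including no overlap at all) go to the schema listed first.
    """
    return max(SCHEMATA, key=lambda name: len(SCHEMATA[name]["cues"] & cues))


def expectations(cues: set[str], attended: dict[str, str]) -> tuple[str, dict[str, str]]:
    """Fill every slot with its default; only attended evidence overrides a default."""
    schema = invoke(cues)
    filled = dict(SCHEMATA[schema]["defaults"])  # copy so the stored defaults stay intact
    filled.update(attended)
    return schema, filled


# A glance at a low, loud car: nothing about the driver is actually observed.
schema, slots = expectations({"low profile", "loud exhaust"}, attended={})
print(schema, slots["driver personality"])  # sports car reckless (assumed, never seen)

# Attending to the driver overturns one default but leaves the others assumed.
schema, slots = expectations({"low profile", "loud exhaust"}, attended={"driver age": "elderly"})
print(slots["driver age"], slots["speed"])  # elderly fast
```

The tie rule in `invoke` is arbitrary, which hints at how a poorly matched schema can still be invoked.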

2. Event schemata or “scripts”: As the name implies, these are stereotyped sequences of events that create expectations about what will occur over time and what the expected behavior should be. For example, most people have a “restaurant script”: enter restaurant, be seated by a host/hostess, receive a menu, order food, eat food, pay, leave tip, and exit. Scripts may have entities such as “actors,” other people in the script (e.g., waiters), and “props,” objects used in the script (e.g., menus). Scripts can have hierarchies, so there may be a “fast food script” that is a variant of the “sit-down” restaurant script above. It keeps some of the steps but removes or alters others.

The main point is that once the script is invoked, the diner’s actions are guided by knowledge of the script even if they have never been in that particular restaurant before. The script allows “mental time travel,” prediction and anticipation of what is going to occur and what to do without the need to think much about it. This reduces mental workload and also allows for faster reaction.
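A script's predictive value comes from reading the next step off a stored sequence. A minimal sketch, assuming a script can be reduced to an ordered list of step labels (real scripts also carry actors and props). The fast-food steps are hypothetical, since the text says only that the variant keeps some steps and removes or alters others.

```python
from typing import Optional

# The sit-down restaurant script, step by step, as listed in the text.
RESTAURANT = [
    "enter", "be seated by host", "receive menu", "order food",
    "eat food", "pay", "leave tip", "exit",
]

# Hypothetical fast-food variant: drops seating, menu, and tip, orders at a
# counter, and moves payment before eating.
FAST_FOOD = ["enter", "order food at counter", "pay", "eat food", "exit"]


def predict_next(script: list[str], last_event: str) -> Optional[str]:
    """Anticipate the next event from the script alone ("mental time travel").

    Returns None at the end of the script. Raises ValueError if the event is
    not in the script, i.e., the script no longer fits the situation.
    """
    i = script.index(last_event)  # list.index raises ValueError if absent
    return script[i + 1] if i + 1 < len(script) else None


print(predict_next(RESTAURANT, "order food"))            # eat food
print(predict_next(FAST_FOOD, "order food at counter"))  # pay
```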

3. Conceptual schemata or “conceptual models”: At the beginning of section 1, “Orientation,” I explained that this set of pages is an attempt to transmit my conceptual model of human nature to the reader. By conceptual model I mean a system’s objects and their relationships, as well as their limitations and constraints. I used a quote that described how accurate causal attribution depends on understanding the mechanism, which requires a conceptual schema of how the mechanism works. The driver understands that a blown tire caused the boom and the pulling only because he accurately understands that part of the car's operation. Likewise, it is only possible to determine causation in human-related events with a good conceptual model of human nature.

These conceptual models are similar to object and event schemata but go much deeper. Humans widely use conceptual models to understand not only technology but also other humans, social groups (e.g., the US government), and any other “system” that consists of multiple interacting parts, especially when the parts and their operations are not directly observable and must be inferred. The model may be explicit, where the actor can articulate it, or implicit, where it is held unconsciously.

In many cases, however, only a working model is necessary, and an elaborate conceptual model is not. As Norman (1999) writes about conceptual schemata in The Invisible Computer, “Precision, accuracy, and completeness of knowledge are seldom required.” Most people successfully use smartphones and computers without an elaborate model of how they work, hence the human factors mantra that “the interface is the system.” The needed accuracy of the model depends on the user's or investigator's goals and the stakes at risk.

However, not all conceptual models are accurate or useful. I described how most people have a very flawed conceptual model of how perception works, the “Homunculus Theory of Seeing.” Similarly, humans typically have a flawed implicit conceptual model of human nature, which often becomes apparent when 20/20 hindsight and the fundamental attribution error rear their ugly heads. Much of this is due to starting with the prescriptive view of human nature and the use of Folk Psychology explanations.

For present purposes, the point is that by having a conceptual model, humans can respond rapidly with little or no thought. The driver who hears the bang and feels the pull can respond automatically. In short, conceptual models are another form of schema that act as kludges to avoid the need for deep analysis. However, humans can use conceptual schemata to solve problems in System 2 as well as System 1. They can reason through an object’s inner workings to diagnose faults and to guide behavior.

4. Motor schemata. The cognitive schemata discussed above are memory structures for gaining situational awareness and guiding behavior in familiar circumstances. They provide a cognitive short-cut and often result in an automatic response, one made with little conscious control. There is a different type of schema for automatically generating a response. These “motor schemata” (Schmidt, 1975), like their cognitive brethren, are built up through experience. They are pre-programmed so that, once activated, they run off with little or no awareness or supervision. As with cognitive schemata, an individual perceives scene aspects that invoke the most appropriate motor schema (if one is available). Vehicle braking is a particularly good example. A driver traveling fast sees the car ahead suddenly stop. The driver lifts the foot off the accelerator, moves it laterally some fixed distance, and then depresses the brake pedal. All of this seemingly occurs automatically without any conscious planning. These behaviors are termed “open-loop” or “ballistic.” Like a bullet fired from a gun, they cannot be altered once triggered. They proceed with no feedback or correction. In fact, all System 1 kludges are poor at detecting errors.

Motor schemata have several virtues. First, they consume minimal mental resources. Compare a person who is learning to play the piano with an expert pianist. The novice must pay close attention to the keys and consciously control every finger press as he performs in System 2. If the expert is playing a highly overlearned piece, moving his fingers requires so little mental resource that he can simultaneously have a conversation or engage in some other secondary behavior. Virtually all skilled motor behavior requires strong motor schemata. Second, the purpose of training is often more than just teaching a skill; it is teaching a skill that is resistant to emotional arousal. Motor schemata are highly resistant to degradation under stressful conditions.

Last, motor schemata often contain “chains.” These are series of movements that are “chained” together so that, once triggered, they run off in a stereotyped sequence. The braking response was an example: 1) lift foot, 2) move right x inches, 3) depress brake pedal. Another good example is dialing a very familiar phone number. With experience, it just seems to happen with little or no guidance after initiation. Although the actor emits several distinct, sequential responses, they can be treated as a single response, a single chain. Chaining is especially salient when the responses in the chain are similar, as when the phone number is 222-3333. I return to chaining at several junctures below.
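The difference between an open-loop chain and feedback-guided behavior can be sketched in a few lines. This is a hypothetical illustration of the idea, not a motor-control model: the chain is a fixed list of steps that, once triggered, runs to completion, whereas the closed-loop version checks each step and can stop to correct. The step labels paraphrase the braking example; the missed-pedal error is invented for illustration.

```python
from typing import Callable

# The braking chain from the text; the lateral distance ("x inches") is left unspecified.
BRAKE_CHAIN = ["lift foot off accelerator", "move foot right to brake", "depress brake pedal"]


def run_open_loop(chain: list[str]) -> list[str]:
    """Ballistic execution: once triggered, every step runs in order and no
    feedback is consulted, so an error cannot stop the sequence."""
    executed = []
    for step in chain:
        executed.append(step)
    return executed


def run_closed_loop(chain: list[str], succeeded: Callable[[str], bool]) -> list[str]:
    """For contrast: check feedback after each step and stop to correct on error."""
    executed = []
    for step in chain:
        executed.append(step)
        if not succeeded(step):
            executed.append(f"correct: {step}")
            break
    return executed


def foot_landed(step: str) -> bool:
    # Hypothetical error: the lateral move misses the brake pedal.
    return step != "move foot right to brake"


print(run_open_loop(BRAKE_CHAIN))                 # all three steps; the miss goes unnoticed
print(run_closed_loop(BRAKE_CHAIN, foot_landed))  # stops after the move to correct it
```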

In sum, schema theory suggests that human nature works like this: we encounter a novel situation and consciously attempt to analyze it so that we can find appropriate behavior (System 2). Over time, we learn to recognize perceptual patterns that allow the situation to be classified into a pre-stored mental template, i.e., a schema. Cognitive ease drives us toward performing the task with less and less mental effort until we can do it without any conscious thought. It becomes automatic, saving mental load and increasing speed. Humans will generally try to convert tasks initially performed by System 2 into tasks governed by System 1. We all know that rules are for beginners. We all know that the best way to bring an organization to its knees is to “work to rule.” At every opportunity, humans will offload tasks from controlled System 2 to the alternative System 1, which generates behavior automatically without conscious volition or decision. Dreyfus (2004) summarized System 1 processing by saying that when a person performs a learned task:

He or she does not solve problems. He or she does not even think. He or she just does what normally works and, of course, it normally works.

It is important to appreciate that humans invoke and act on schemata/scripts so quickly and automatically that they generally are not aware of doing it at all. This is not to say that humans are robots. The physical behavior is still voluntary in the sense that it is directed by cognitive processes. It is just that the person has little or no awareness that the cognitive processes are operating. Humans tend to automatically assume that voluntary behavior and consciousness (intention) are linked only because we have no introspective access to voluntary but unconscious behavior. This distinction between consciousness and voluntariness of behavior is important in assessing causation and blame. For example, a police officer who mistakenly shoots an unarmed suspect is acting voluntarily, but his decision may have been based on unconscious decision factors, including mental short-cuts, schemata, and emotions, operating outside of awareness and conscious control.

This Sounds Great. What Could Possibly Go Wrong?

As Dreyfus says, automatic behavior usually works. The key word is “usually.” While schemata can produce smooth, efficient, skilled, and accurate behavior, they are fallible. The invocation of a schema and the expectations it generates can go wrong in several ways:

- The wrong schema is invoked because the actor used the wrong cue(s). The actor confidently performs the "strong but wrong" response. System 1 is not good at detecting errors;

- The familiar situation has changed in a non-obvious way. Schemata work on holistic recognition, so the actor may not notice a new detail. The schema, and the assumptions and expectations it creates, are no longer functional. Again, the actor is likely to make a “strong but wrong” response;

- There may be multiple schemata that match the situation, and the actor has to decide which one is right. This requires a “conflict resolution strategy.” Usually the tie-breaker is specificity. Again, the actor may choose the wrong schema and make a wrong, though perhaps less strong, response;

- The individual might find, after searching memory, that there is no available schema that fits the current situation. At this point, the actor has two options: 1) fall back into an analytical System 2 mode or 2) continue searching memory for an applicable schema. If time is short, neither alternative may be possible. In some cases, the actor runs out of time and does nothing; and

- The most problematic situation occurs when the actor discovers that the active schema is violated and there is no ready substitute. This typically leads to a moment of shock, confusion, and anxiety. Again, the actor may do nothing. If the stress level is high, as when under threat, System 0 (emotions) is likely to influence the response (discussed in the next installment).

Here's an example of schema failure from personal experience. One night, I was driving in moderate traffic on the Skyline Freeway south of San Francisco. I came around a curve, looked ahead, and became confused. At first, I was sure that something was wrong, but I didn't know what. Suddenly, the icy finger fell on my spine. Then I realized that two headlamps ahead were directly facing me. This couldn’t be right, could it? Until that moment, I was unaware that I had a freeway schema saying that I should never see headlamps facing me, only taillights. Cars all travel in the same direction. Right? But now my normal schema was shattered, and I had no ready substitute. I remember the thoughts that went through my mind as it raced to analyze the situation or to find an applicable schema. “Where am I? I’m driving. I’m on the Skyline Freeway. There aren’t supposed to be headlamps facing me on a freeway. Are there? Why are there headlamps facing me? There shouldn’t be.” What was going on? At first, I desperately searched memory for an applicable schema and, finding none, attempted to perform a System 2 logical analysis. After perhaps a second or two of such thoughts and disorientation (time is difficult to judge in such situations), time ran out. I drove past the headlamps in the lane just to my left. I had made no response8.

This event highlights some key points about what can happen when schema-guided behavior fails. First, my freeway driving was partially controlled by a schema that I didn't even realize I had. Second, I knew something was wrong but could not initially identify the problem. System 1's automatic schema-invocation mechanism looked at the scene holistically and found a mismatch with the active schema. There was a delay before I fell back to System 2 for an analysis that homed in on the facing headlamps. This is not the only emergency I have experienced in which I knew that something was wrong before I could identify the specific problem (see Green, 2024). Third, I experienced “fundamental surprise” (Lanir, 1986) when the current situation did not match the active schema9. The result was shock, confusion, and anxiety. Fourth, I made no response and am here to write these words only through simple luck. Kay (1971) has succinctly described such situations this way:

It is the case that in all accidents the individual has too much information to process in too little time... But the reason that there is too little time is nearly always because the individual has to switch from his highly organized, efficient pre-programmed mode [i.e., System 1 automatic schema] to his delayed S-R mode [System 2 analytical]. In order to adapt to the contingencies man has this unique ability to stop one programme and, if necessary, switch to another. But it is at the point of change where he is most vulnerable and where any initiation has the characteristic of the S-R mode. In the case of accidents the operator rarely has time to do more than try to stop the ongoing programmed skill and take the beginnings of avoiding action.

The failure of the active schema creates the opposite of cognitive ease: “cognitive strain.” This is where humans commonly err, owing to both a lack of experience and a heightened level of arousal. I can tell you after 55 years of experience that there are only two great truths in psychology. One is Thorndike’s Law of Effect, and the other is that the first time is always different. People do things that they wouldn’t do otherwise and make errors that they wouldn’t otherwise make. This is often overlooked in mishap inquiries because the investigators act in hindsight and do not experience fundamental surprise. There is some irony in the typical mishap investigation: the behavior of someone acting in System 1 is being evaluated by someone who is acting in System 2.

In sum, this is the key point: while RPD and similar strategies reflect normal behavior, in which the actor perceives the correct cues and automatically invokes the correct schema, most really bad events are singular occurrences that the actor has never experienced and likely never even practiced. He has no schema. As far as the actor is concerned, it is a black swan event.

Next: More Kludges—Kopfkino, Predictive Coding, and Emotion

Endnotes

1It is also possible to decompose System 2 into mechanisms, e.g., hypothetical reasoning and cognitive decoupling (Evans & Stanovich, 2013).

2This is why drivers in skids tend to turn the steering wheel automatically in the opposite direction, even though the optimal behavior is turning into the skid, which is toward the direction of the slide. Like most S-R compatibilities, the bias can be overcome with training.

3See, for example, Gigerenzer, G. (1991). How to make cognitive illusions disappear: Beyond “heuristics and biases”. European Review of Social Psychology, 2(1), 83-115 and https://www.psychologytoday.com/ca/blog/the-superhuman-mind/201611/linda-the-bank-teller-case-revisited among many others.

4Although Oliver Wendell Holmes may have been more accurate by saying that "People think dramatically, not quantitatively."

5There are many other types of short-cuts not covered here or in most textbooks or discussions. For example, there is a whole class of short-cuts based on social interaction. We look to others for cues to appropriate behavior and decision-making, e.g., “authority,” “unity,” “reciprocation,” “visual signaling,” etc. Moreover, many short-cuts are not commonly recognized as such. Use online reviews to decide on a purchase? That’s another social decision-making short-cut. Humans have a vast array of mechanisms designed to reduce mental workload.

6The classification of schemata used here is purely didactic and serves present purposes. Others have more elaborate classifications, e.g., self-schema, role schema, etc.

7The frame is not the schema but rather a notation for writing down the schema. There are others, most notably networks.

8Then I drove over some debris lying in the roadway. A collision must have occurred just before I arrived. One of the vehicles presumably had spun 180°, so its headlamps were facing back toward the oncoming traffic.

9The surprise can be felt even in relatively benign situations. Here's a simple example. A few days ago, I came home, put the key in the lock, and tried to turn it. It didn't work. I remember feeling a stab of confusion and some shock. My normal schema had failed. Thrown back into System 2, I then remembered that we had just changed the lock and I needed to use a new key.
