Photographs vs. Reality*: Seeing Is Deceiving

Marc Green

One common application of photographs and other pictorial evidence is to show the court what a viewer could, would or should have seen at the time of an accident, crime or other event. Such photographic evidence often escapes proper criticism. Few courtroom viewers appreciate the technological limitations inherent in photographic reproduction. More importantly, few if any courtroom viewers understand the nature of seeing, or that there is no way to dissociate the viewer from the image. As a result, no photographic image can accurately portray what the viewer would, could, or should have seen at the time of the original event.

Visibility and perceptibility are important issues in many court cases. In many accident and criminal cases, a viewer failed to see or properly perceive some critical object or piece of information. A driver failed to see another vehicle; a consumer failed to see a warning; a pedestrian failed to see a stairway, etc.

In such cases, the picture is intended to show jurors what a viewer could/should/would have seen at the time of an event. Presumably, the jurors can then directly assess whether there was sufficient visual information for the defendant to have acted reasonably. The picture "can be seen as testifying to the reality of the situation it records" (Messaris, 1997) and can "provide the trier of fact with an opportunity to draw a relevant first hand sense impression" (Graham, 1982).

This explains why pictorial evidence is so highly persuasive. Viewers typically believe that they are seeing reality, so the effect is immediate and direct. In contrast, a more scientific analysis based on research data and calculations is more abstract and less intuitive. Left unchallenged, pictures are likely to override even the most compelling and conclusive scientific evidence (Green, et al, 2008).

This is unfortunate because "All photographs of any kind are always distorted relative to reality" (Evans, 1976), a fact suggesting the corollary that seeing may be believing, but it can also be deceiving. Despite their power and their limitations, pictures often evade the close scrutiny that verbal and scientific evidence receive (Dellinger, 1997). Courts carefully examine and critique witness testimony and other linguistic evidence, scientific calculations, etc., but proper critique of pictorial evidence requires knowledge that is relatively rare. Few understand the technical issues of photography and image processing, and even fewer understand the subtle and often unintuitive perceptual issues. I am not just talking about judges, jurors and attorneys. Even most "experts" who create the pictures are largely unaware of the central issues, especially in perception.

This article provides an introduction to the limitations of pictorial evidence in representing reality. It further outlines the issues that might be used to challenge pictorial evidence. The limitations of photographic evidence fall roughly into four categories. Two, optics and photometrics, are technical physical factors that limit an image's resemblance to a natural scene. The other two, sensory and cognitive, are factors that affect viewer perception. The main point of the discussion is that pictures do not and cannot represent a reality which is independent of the viewer who sees them. As a result, jurors cannot see what the person involved in the accident or an eyewitness saw.

The discussion concerns photographic evidence generally but centers on images of nighttime roadway scenes. Visibility and conspicuity issues most frequently arise in accidents occurring at night. Moreover, low-light photographs and videotapes are typically the least reliable and most misleading.

The issues involved in physical image production can be complex, so I focus on "low dynamic range" (LDR) pictures that are created from a single camera shot. This is by far the most common type presented in court. Typically, the photographer goes to the scene and takes a series of shots (or perhaps videotape), each of which becomes a separate picture (or movie). I will say little about the much rarer, but more sophisticated, technique of "high dynamic range" (HDR) photography, which uses a computer program to merge a series of camera images of the same scene, taken at different exposures, into a single image. HDR is capable of producing much more realistic images than LDR, but it still has some of the same problems. At the end of this article, I return for a few words on HDR as well as on images that are entirely computer generated. Lastly, movies and animations are not covered here, but they are included in a more technical analysis elsewhere (Green, et al, 2008).

Overview Of The Problem

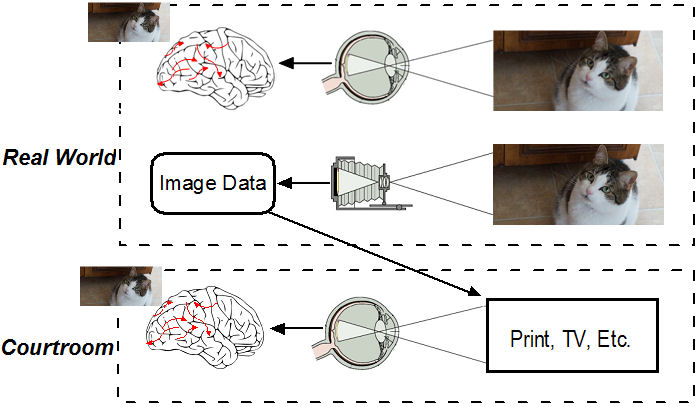

In an ideal world, jurors would receive the same images as those received by the original viewer. The steps necessary to create this ideal are shown in Figure 1. At the time of the accident, the viewer fixated a point in the scene, which cast an image onto the retina, the eye's light-sensitive "film" that records the image. This retinal image is the only visual information available to the viewer, so all discussions of perception start here. As I will explain below, however, this is not what the viewer "sees." His perception is actually an interpretation of this image based on a variety of cognitive processes.[1]

Figure 1. Flow of images from natural scene to photographic evidence presented in court.

Suppose that a photographer wants to create a picture to show the jury how a scene appeared. The photographer would place the camera at the viewer's eye position and aim at the point in the natural scene where the viewer fixated. The shutter opens and the camera records the image on film in a traditional camera or on an array of "photosites," the digital camera's analog to the eye's photoreceptors.

The camera converts the light intensity and wavelengths into image data, which are stored on the film or in digital memory. At a later time, some technology uses the image data to create a visual image, either a paper print or an electronic display. The juror views the display, which casts an image onto his retina. Ideally, this image on the juror's retina would be identical to the one that had been on the original viewer's retina.[2] This, however, is impossible to achieve because of technical limitations in the ability of film and photosites to record light. Further distortions occur because of limitations in the ability of display media to show images (Green, et al, 2008).

Technically, an image is a two-dimensional array of points, each with a specific intensity and wavelength. The similarity between the natural and photographic images is called "image fidelity," and it depends on two factors. First, the natural and photographic images should match optically. Objects in the image, such as a pedestrian in the roadway, should be the same size. Further, the relative sizes of and apparent distance between different objects, the "perspective," should also match. Another size factor is field-of-view, the overall area of the scene captured by the image. There are also other, less critical, optical factors such as depth-of-field, the area that is in focus. Depth-of-field has a strong influence on conspicuity and perceived distance (Green, et al, 2008).

Second, the two images should match "photometrically," meaning that corresponding points in the scene and in the picture should have the same light intensity and wavelength. The key photometric variable, however, is usually brightness (actually luminance) contrast, the difference between the light and dark areas of the image. This is what determines visibility and, to a lesser extent, conspicuity.[3] Color contrast is also important in some cases.
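The article does not say which contrast formula it has in mind. Two definitions commonly used in vision science are Weber contrast, (L_target - L_background) / L_background, for a small object on a uniform background, and Michelson contrast, (L_max - L_min) / (L_max + L_min), for patterns. The sketch below implements both. The luminance values are hypothetical.

```python
def weber_contrast(target: float, background: float) -> float:
    """Weber contrast of a small target on a uniform background.

    Luminances in any consistent unit (e.g. cd/m^2); the result is unitless.
    Negative values mean the target is darker than its background.
    """
    if background <= 0:
        raise ValueError("background luminance must be positive")
    return (target - background) / background


def michelson_contrast(l_max: float, l_min: float) -> float:
    """Michelson contrast between the brightest and darkest luminances (0 to 1)."""
    if l_max < l_min or l_max + l_min <= 0:
        raise ValueError("need l_max >= l_min and a positive total")
    return (l_max - l_min) / (l_max + l_min)


# Hypothetical values: a dark-clothed pedestrian against a lit road surface.
print(weber_contrast(0.5, 1.0))      # -0.5 (darker than the background)
print(michelson_contrast(3.0, 1.0))  # 0.5
```

Both measures are ratios, so scaling every luminance by the same factor leaves them unchanged. A photograph alters contrast through nonuniform treatment of light and dark areas, such as clipping and nonlinear tone curves, which are the issues covered under Photometric Factors.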

Currently, perfect image fidelity is unachievable. It is possible to produce varying degrees of fidelity depending on the sophistication of the photographer and the nature of the scene. The lowest level of photographic sophistication occurs when an untrained person simply takes an ordinary consumer camera and allows the automatic settings to determine exposure. A more sophisticated photographer might take several pictures at different exposures and then later choose the one he considers best.

However, image fidelity is not enough. The picture must still create the same retinal image as the natural scene. Retinal images are partly determined by additional variables that cannot be specified by the picture alone. These include viewing distance, eye position relative to the picture's center of projection, picture dimensions, and the ambient light. The degree of match between the picture's retinal image and the scene's retinal image is "retinal fidelity."

The image fidelity and retinal fidelity issues are purely technical problems which, in theory, could someday be overcome by more advanced cameras and displays and by carefully determining viewing distances, etc. There is a third set of issues, "psychological fidelity," that can never be achieved. Even perfect image and retinal fidelity cannot specify many of the sensory, cognitive and emotional/arousal factors that determine perception. Some are purely sensory, e.g., viewing duration, visual field location, light adaptation, and viewer age and visual impairment. Cognitive factors include experience, expectation, and the attention demanded by a concurrent task, such as driving. Emotional/arousal factors include stress, anxiety, fear, and circadian variations.

Moreover, pictures do not and cannot represent viewer perception. Perception is a conscious experience that depends as much on the contents of the viewer's mind as on the contents of the image. I will explain that viewer knowledge, expectation, goals and intentions are critical in evaluating photographic evidence. Perhaps the biggest problem with photographic evidence is that it makes false and naive assumptions about what it means to "see."

Below, I critique photographic evidence in more detail, beginning with the factors limiting image fidelity. The subsequent discussion explains the perceptual issues that render photographic evidence incapable of informing the jury about visual conditions.

Optical Factors

In visual science, size does not refer to physical dimensions in feet or inches. This would not work for a simple reason: a 6-foot man at 500 feet creates a much smaller retinal image than the same 6-foot man at 100 feet.

To be useful, the size variable must be scaled by distance. The problem is solved by specifying size as "visual angle," which is approximately size/distance, expressed in radians.[4] This relationship allows specification of the proper picture size. Suppose a photographer wants to show the jury a picture of a six-foot-tall pedestrian that the viewer saw at a distance of 150 feet. The visual angle to be represented is 6/150, which equals 0.04 radian. Converted to the more common unit of degrees, this is 2.29°.[5]
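The text's size/distance is the small-angle approximation. The exact visual angle of an object centered on the line of sight is 2·arctan(size / (2·distance)). The Python sketch below compares the two for the article's pedestrian example. At this size they agree almost perfectly, and both come to about 2.29°.

```python
import math


def visual_angle_exact(size: float, distance: float) -> float:
    """Visual angle (radians) of an object centered on the line of sight.

    `size` and `distance` must use the same unit (e.g. feet).
    """
    return 2.0 * math.atan(size / (2.0 * distance))


def visual_angle_approx(size: float, distance: float) -> float:
    """Small-angle approximation used in the text: size / distance (radians)."""
    return size / distance


# The article's example: a 6-foot pedestrian seen from 150 feet.
for name, fn in (("exact", visual_angle_exact), ("approx", visual_angle_approx)):
    theta = fn(6, 150)
    print(f"{name:6}: {theta:.5f} rad = {math.degrees(theta):.3f} deg")
```

Here the two values differ by roughly one part in ten thousand. The approximation only starts to matter for large angles, such as an object near the edge of a wide field-of-view.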

The same visual angle can be reproduced with a wide range of picture sizes and viewing distances. Suppose that the pedestrian's height in the photograph is 1 inch. Jurors viewing the picture at a distance of 25 inches will receive the correct size retinal image because 1/25 = 0.04 radian = 2.29°. If a juror moves the picture farther away, the image becomes too small; if he moves it closer, the image becomes too big. Since 25 inches is longer than normal viewing distance, jurors will likely hold the picture closer and receive too large an image. And if the entire jury views the same picture at the same time, most or all of them will be at the wrong distance and see an incorrect image size.
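The arithmetic can be made concrete. For a given picture size, the viewing distance that reproduces the scene's visual angle is picture size divided by the angle in radians. The sketch below uses the text's small-angle approximation. The juror viewing distances in the loop are hypothetical.

```python
def correct_viewing_distance(picture_size: float, target_angle: float) -> float:
    """Distance at which a feature `picture_size` tall on the picture subtends
    `target_angle` radians (small-angle approximation, as in the text)."""
    return picture_size / target_angle


def retinal_size_ratio(picture_size: float, viewing_distance: float, target_angle: float) -> float:
    """Juror's retinal image size relative to the correct one.

    > 1 means the image is too large (picture too close); < 1 means too small.
    """
    return (picture_size / viewing_distance) / target_angle


TARGET = 6 / 150   # 0.04 rad: the 6-foot pedestrian at 150 feet
print(correct_viewing_distance(1.0, TARGET))   # 1-inch pedestrian on the print -> 25 inches
for d in (12, 16, 25, 40):                     # hypothetical viewing distances, inches
    print(f"{d:>2} in: {retinal_size_ratio(1.0, d, TARGET):.2f}x the correct size")
```

For example, a juror holding the print at 16 inches would receive a pedestrian image about 1.56 times too tall (25/16).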

The second size consideration is relative size or "perspective." Everyone has seen extreme close-ups where foreground objects are much too large relative to those more distant. Proper perspective maintains the same relative sizes of foreground and background as seen by the original viewer. Unfortunately, perspective is often misunderstood and surrounded by misconceptions, most involving lens focal length.

Perspective depends only on viewpoint. The photographer must take the shot from the same eye position used by the viewer of the natural scene. This will ensure that the procedure for creating correct size for one object creates correct size and separation for all. If the camera is too close, the nearer objects become exaggerated relative to distant ones. The apparent separation of objects also changes. Conversely, if the camera is too far, distant objects become exaggerated relative to closer ones.
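An idealized pinhole-camera model (no lens distortion) makes the point numerically. Image height is focal length times object height divided by object distance. The focal length therefore cancels out of the ratio between a near and a far object, leaving only the camera-to-object distances, i.e., the viewpoint. The distances below are hypothetical.

```python
def image_height(focal_length: float, object_height: float, object_distance: float) -> float:
    """Ideal pinhole projection: image height = f * H / D (H and D in the same unit)."""
    return focal_length * object_height / object_distance


def near_far_ratio(focal_length: float, near_distance: float, far_distance: float) -> float:
    """Image-size ratio of two equally tall objects at different distances."""
    near = image_height(focal_length, 1.0, near_distance)
    far = image_height(focal_length, 1.0, far_distance)
    return near / far


# Hypothetical scene: two equal objects 50 ft and 150 ft from the viewer's eye.
for f in (35, 50, 105):                      # focal lengths in mm
    print(f"f = {f:>3} mm at the viewer's eye: near/far = {near_far_ratio(f, 50, 150):.2f}")

# Same 50 mm lens, but the camera placed 25 ft in front of the viewer's eye.
print(f"f =  50 mm, 25 ft closer:      near/far = {near_far_ratio(50, 25, 125):.2f}")
```

From the viewer's eye, every focal length gives the same 3:1 ratio. Moving the camera forward raises the ratio to 5:1, which exaggerates the near object just as the text describes.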

If the camera moves laterally, then the perspective as well as the shape of objects and their backgrounds also change. This is especially important because visibility and conspicuity are as much a function of background as of object. Change the background and possibly change the visibility and the conspicuity.

It is common to hear a photographer claim that his perspective is correct because he used a lens with some specific focal length, e.g., "I used a 50 mm lens because it captures what the eye sees," or "it captures correct perspective." Such statements are meaningless.[6] Focal length simply changes the overall magnification and field-of-view but has no effect on perspective.[7]

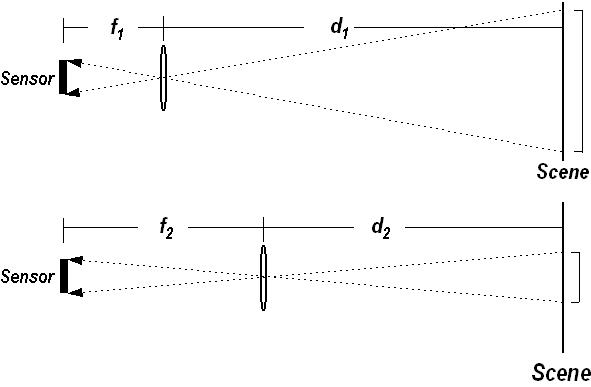

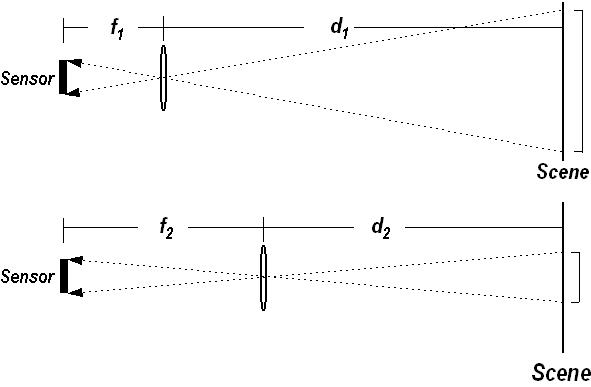

Figure 2 demonstrates why this is the case. The upper panel shows a scene projecting an image onto a sensor through a lens with focal length f1. Below, it shows the same scene being projected through lens f2, which has a longer focal length. The difference is clear: because lens f2 is farther from the sensor, it only allows the projection of a smaller visual angle of the scene. The field-of-view is smaller. However, that smaller angle still fills the entire sensor, so it is magnified relative to f1.

Figure 2. Changing focal length alters size and magnification but not perspective.

Figure 3 demonstrates the effect. Picture A is the image from a zoom lens set to about 35mm focal length. Picture B shows the same scene shot with the same lens set to about 105mm, three times the focal length. The difference in field size is obvious. Picture C shows picture A, the 35mm image, blown up by a factor of three and cropped. It is virtually identical (except for a slight lateral change in camera position) to picture B, taken with a 105mm lens. Note that there is no perspective distortion of the nearby tree limbs compared to the distant house.

Figure 3. A scene photographed through 35 mm (A) and 105 mm (B) lenses. Panel C shows picture A magnified by a factor of three. There is no perspective distortion.

In sum, longer focal lengths shrink the field-of-view while magnifying individual objects. Shorter focal lengths do the reverse, enlarging the field-of-view but shrinking its contents.
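The Figure 3 demonstration can be checked with the standard field-of-view formula for an ideal rectilinear lens, FOV = 2·arctan(w / 2f), where w is the sensor width. This sketch assumes a 36 mm-wide "full-frame" sensor, which the article does not specify. Cropping the 35 mm picture to its central third is modeled as a sensor one third as wide.

```python
import math


def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view (degrees) of an ideal rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


SENSOR_WIDTH = 36.0  # mm; an assumed full-frame sensor, not stated in the article

fov_35 = horizontal_fov_deg(SENSOR_WIDTH, 35)
fov_105 = horizontal_fov_deg(SENSOR_WIDTH, 105)
# Keeping only the central third of the 35 mm frame acts like a sensor 1/3 as wide.
fov_35_cropped = horizontal_fov_deg(SENSOR_WIDTH / 3, 35)

print(f"35 mm: {fov_35:.1f} deg | 105 mm: {fov_105:.1f} deg | 35 mm cropped 3x: {fov_35_cropped:.1f} deg")
```

The cropped 35 mm picture and the 105 mm picture cover the same angle, about 19.5°, because 12/35 equals 36/105. The uncropped 35 mm frame covers about 54°.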

Photographers are often caught amid conflicting demands. The eye's field-of-view is about 200° horizontally, far greater than most cameras can capture. The photographer may use a shorter focal length in order to create a bigger and more life-like field-of-view. This shrinks objects in the image. The resulting pictures must be greatly enlarged (or held very close to the eye) to achieve proper visual angle. However, enlarging pictures causes graininess and loss of detail. In sum, there are inherent tradeoffs among focal length, picture size, viewing distance, and image resolution. None of these tradeoffs affects perspective.
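The tradeoff can be quantified with a standard relation from photographic geometry. A print reproduces the scene's visual angles when it is viewed from a distance equal to the lens focal length multiplied by the enlargement from sensor to print. This sketch assumes a 36 mm-wide sensor and a hypothetical 500 mm (about 20 inch) viewing distance. It does not model grain or loss of detail.

```python
SENSOR_WIDTH_MM = 36.0       # assumed full-frame sensor width
VIEWING_DISTANCE_MM = 500.0  # hypothetical courtroom viewing distance (about 20 in)


def required_enlargement(focal_length_mm: float, viewing_distance_mm: float) -> float:
    """Sensor-to-print magnification at which a print viewed from
    `viewing_distance_mm` reproduces the scene's visual angles
    (correct viewing distance = focal length x magnification)."""
    return viewing_distance_mm / focal_length_mm


for f in (24, 35, 50, 105):
    m = required_enlargement(f, VIEWING_DISTANCE_MM)
    width_in = SENSOR_WIDTH_MM * m / 25.4
    print(f"{f:>3} mm lens: enlarge {m:4.1f}x, print about {width_in:4.1f} in wide")
```

With a 35 mm lens, for instance, the full frame must be enlarged about 14 times, to a print roughly 20 inches wide. Shorter lenses demand still larger prints. Perspective is untouched throughout, because the camera position never changes.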

Many people confuse focal length with perspective because of these tradeoffs between object size and field-of-view size. If a photographer wants a wider, more life-like field-of-view, he must use a shorter focal length. This shrinks objects in the image. To compensate, the photographer often moves the camera forward in order to make the objects bigger. The perspective then changes, but it is the camera placement and not the lens that causes the effect.

Why is getting object size right so important? Visibility and conspicuity decline not only with small size but also with visual field location, that is, with distance from the line of sight. For a driver on the roadway, an intersecting vehicle may first appear in the far periphery, where both resolution and attention are low. Now imagine a juror who is looking at the center of a photograph that has shrunk the scene to 8x10 inches. If the picture is held too far away, the car that was in the driver's far periphery would appear very near the juror's line of sight and be highly noticeable.

Unfortunately, even a correctly sized picture would not solve a problem such as this. The juror would doubtless look directly at the intersecting vehicle because he already knows that it is the primary object of interest. Once the juror has seen it, it is impossible to simulate what the driver could/would see. More on this below.
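A rough sketch of the peripheral-location problem, assuming an ideal rectilinear lens. An object θ degrees off the camera axis lands f·tan θ from the image center. After enlargement by m, a juror fixating the picture center from distance d sees it arctan(f·m·tan θ / d) off-axis. The lens, sensor width, print width and vehicle angle below are all hypothetical.

```python
import math


def offset_on_print(eccentricity_deg: float, focal_length_mm: float, magnification: float) -> float:
    """Distance (mm) from the print center of an object that was
    `eccentricity_deg` off the camera axis (ideal rectilinear lens)."""
    return focal_length_mm * math.tan(math.radians(eccentricity_deg)) * magnification


def apparent_eccentricity_deg(offset_mm: float, viewing_distance_mm: float) -> float:
    """Angle between the juror's line of sight (on the print center) and the object."""
    return math.degrees(math.atan(offset_mm / viewing_distance_mm))


FOCAL_MM = 24.0              # hypothetical wide-angle lens
MAG = 254.0 / 36.0           # assumed 36 mm-wide sensor printed 10 in (254 mm) wide
offset = offset_on_print(30.0, FOCAL_MM, MAG)   # vehicle 30 deg off the driver's sightline

correct_distance = FOCAL_MM * MAG               # viewing distance that restores true angles
for d in (correct_distance, 350.0, 500.0):
    print(f"viewed at {d:3.0f} mm: vehicle {apparent_eccentricity_deg(offset, d):4.1f} deg off-axis")
```

At the geometrically correct distance of about 17 cm, the vehicle is 30° off-axis, as it was for the driver. At 50 cm it is only about 11° off-axis, much closer to the juror's center of gaze.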

In closing, this discussion has several takeaway messages. First, correct size depends greatly on viewing conditions in the courtroom, most notably viewing distance and angle. Second, correct size is important in attempting to depict visibility and conspicuity. Third, there is no particular focal length that can "see what the eye sees" because retinal image size depends on both picture size and viewing distance. More importantly, cameras don't see anything. They record images. This may seem obvious, but it is a distinction that will become important later.

Photometric Factors

In an ideal world, the intensity and wavelength of each point in the picture would be the same as in the natural scene. This would create proper contrast, the difference in brightness between object and background. It is critical to portray contrast properly, since it is the basis for vision, determines visibility and affects conspicuity.[8] Correct wavelength gives proper color, which can also be important in some situations, but generally brightness contrast is the key variable in nighttime scenes.

LDR has a very limited ability to capture contrast. Camera film and photosites cannot capture the full range of luminances (the "dynamic range") in many scenes, and they further distort the contrast they can capture. Electronic displays and especially photographic prints impose further limitations and introduce various types of distortions (e.g., "nonlinear gammas").

These distortions are technical and beyond the scope of this discussion (Green, et al, 2008), but their consequence is that camera manufacturers must build several assumptions into their cameras. The assumptions produce gross distortions when the scene is mostly white or mostly black, as at night. The most notable assumptions are built into the camera metering system. Camera light meters set exposure on the assumption that scenes are evenly illuminated and have a specific average scene reflectance, normally 18%.[9] That is, they assume that most objects reflect about 18% of the light falling on them. Some cameras offer multiple ways to weight the exposure to emphasize different parts of the scene. As a result, a single camera can produce many different versions of the same photograph. Moreover, different cameras offer different weighting systems and hence produce different-looking photographs.

The various exposure schemes produce passable results in normal daylight scenes (if the contrasts are not too high and the objects not too small) but create major distortions at night, when the vast majority of the scene is very dark. The camera sees a mostly dark field and sets exposure to accommodate this low light level. Even moderately bright areas, such as side marker lamps or reflective tape, are then drastically overexposed and have unrealistically high contrast. As a result, lights appear far more visible than they would have been to an actual viewer.
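A toy model illustrates the logic. It assumes a simple averaging meter that scales the scene so its mean maps to 18% gray, and a sensor that clips at a fixed white point. Real cameras use more elaborate, proprietary weighting schemes, so the numbers are purely illustrative.

```python
def average_meter(luminances, target=0.18, white=1.0):
    """Toy averaging light meter: choose a gain so the scene's mean maps to
    18% gray, then clip anything brighter than the sensor's white point."""
    mean = sum(luminances) / len(luminances)
    gain = target / mean
    return [min(white, gain * lum) for lum in luminances], gain


# Hypothetical night scene in relative units: 98 dark background points,
# one dimly lit pedestrian point, and one side marker lamp.
night = [0.01] * 98 + [0.02, 2.0]
rendered, gain = average_meter(night)
print(f"gain {gain:.1f}: background {rendered[0]:.2f}, pedestrian {rendered[98]:.2f}, lamp {rendered[99]:.2f}")

# Same pedestrian and background with the lamp switched off: the meter brightens everything more.
rendered_no_lamp, gain_no_lamp = average_meter([0.01] * 99 + [0.02])
print(f"gain {gain_no_lamp:.1f}: pedestrian {rendered_no_lamp[99]:.2f}")
```

Three things happen. The meter lifts the dark scene sixfold, and the lamp saturates at full white. The rendered brightness of the very same pedestrian also depends on whether an unrelated lamp happens to be in the frame.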

Moreover, cameras can hold the shutter open for seconds, while the eye summates light over at most a few tenths of a second. By choosing the exposure, a photographer can take a dark scene and make it appear virtually any arbitrary brightness, and can make objects appear at any arbitrary visibility.
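The light a camera records is proportional to exposure time, so the camera's advantage over the eye can be expressed in photographic stops (factors of two). This sketch assumes an eye integration time of 0.2 s as a stand-in for the "few tenths of a second" in the text. The shutter speeds are hypothetical. The comparison ignores sensor gain (ISO) and aperture.

```python
import math

EYE_INTEGRATION_S = 0.2   # assumed value within the "few tenths of a second" range


def stops_over_eye(exposure_s: float, eye_s: float = EYE_INTEGRATION_S) -> float:
    """Ratio, in stops, of light collected during `exposure_s` to light
    collected during `eye_s`, all else being equal."""
    return math.log2(exposure_s / eye_s)


for t in (1 / 30, 0.5, 2.0, 8.0):
    print(f"{t:6.3f} s exposure: {stops_over_eye(t):+.1f} stops relative to the eye")
```

An 8-second exposure, for example, collects 40 times (about 5.3 stops) as much light as a 0.2-second integration.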

The problem is magnified by the vast array of different metering modes. Most cameras allow the user to set a mode which weights various parts of the scene differently in determining the exposure. The same camera in different modes can produce vastly different pictures. Different cameras using different metering modes produce even more variable results.

Some photographers are partially aware of the limitations imposed by automatic metering. They then set the camera to manual and take a series of pictures at different exposures, hoping to get one which closely resembles the scene. This technique is better than using automatic metering, but still has its own problems. How are the pictures to be matched to the natural scene? Unless the photographer views the picture and the natural scene simultaneously, he must make the match in memory, which is a notoriously inaccurate method.

Even if viewed simultaneously, there can be no exact match, because LDR pictures cannot capture all the scene contrast, regardless of exposure setting. High exposures exaggerate the brighter areas of the scene, while lower exposures cause dim, low-contrast areas to turn completely black. A pedestrian in dark clothes might become even less visible.
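A toy model makes the point. If the scene's luminance range is wider than the sensor's usable range, any single exposure either clips the bright end to white or loses the dark end in black, or both. The 1000:1 usable range and the scene values are assumptions for illustration only.

```python
def ldr_capture(luminances, exposure, usable_range=1000.0):
    """Toy single-shot (LDR) capture.

    Values are scaled by `exposure`; anything at or above 1.0 is clipped to
    "white" and anything below 1/usable_range is lost in "black". The assumed
    1000:1 usable range is illustrative, not a property of any specific camera.
    """
    out = []
    for lum in luminances:
        v = lum * exposure
        if v >= 1.0:
            out.append("white")
        elif v < 1.0 / usable_range:
            out.append("black")
        else:
            out.append(round(v, 4))
    return out


# Hypothetical night scene spanning five orders of magnitude (relative units).
scene = {"headlamp": 5000.0, "lit sign": 50.0, "road": 0.5, "dark coat": 0.05}
for exposure in (1e-4, 1e-2, 1.0):
    print(exposure, dict(zip(scene, ldr_capture(scene.values(), exposure))))
```

Only the highest exposure records the dark coat at all, and it does so by blowing out both light sources. No single setting records all four areas.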

At most, the photographer can choose the best match. But how good is the best match? Since the juror has never seen the natural scene, he cannot assess the differences between the picture and the natural scene. He has no independent means for assessing the image fidelity. He cannot know whether the picture drastically exaggerates visibility. He has no choice but to accept the picture as reality.

Sensory Factors

So far, I have discussed the issues involved in creating high fidelity between a picture and a natural scene. However, there is much more to "seeing" than the image. The driver on a dark road at night is operating under very different sensory conditions than the juror in the courtroom. The driver may have a very brief viewing duration, while the juror can inspect the picture at his leisure. The critical object may appear in the driver's peripheral field, which has lower contrast sensitivity, while the juror can look directly at the object. A driver travels through a scene of constantly moving objects and loses part of his peripheral field. The juror sees only a static image. The driver's vision was also likely reduced by eye jiggle in the socket due to vibration from the vehicle. The juror is sitting in a rock-steady chair.

Vision also depends on adaptation state. People see best when the eye is adapted to the prevailing light level. Oftentimes, the driver has a high adaptation level due to the glare of oncoming vehicles. He is then adapted to the wrong level for a pedestrian appearing from the darkness. Drivers are also generally mis-adapted by looking at their own headlamp beams. The juror, by contrast, is adapted to the prevailing light level. He has time to look around the picture and adapt to whatever level is necessary for best visibility.

Adaptation clearly demonstrates that there is no objective reality and that vision is always relative to the viewer. Suppose you go from daylight into a movie theater. At first, everything appears black. After a while, the eye adapts and the theater appears much brighter. Which was the objective reality: the black theater or the bright theater? The answer, of course, is neither. There is no reality that is independent of the viewer's condition at that moment in time.

The adaptation example is relatively simple. As the next section explains, the viewer supplies much more than brightness adaptation. The viewer supplies attention, meaning and other cognitive input.

Cognitive Factors

Even if it were possible to create perfect image fidelity in the courtroom as shown in Figure 1, and also to simulate the sensory conditions of the roadway, the pictures still could not represent what the driver would or could see. The reason is that "Perception is largely determined not by physical characteristics but by the perceiver's intentions and goals" (Tejerina, 2003). This conclusion is confirmed by research into a wide variety of everyday tasks (e.g., Land, 2006). Seeing is inherently a function of knowledge, expectation, goals, and concurrent tasks. Since these cannot be simulated in the juror's mind, the pictures are bound to misrepresent what the viewer would or even could have seen.

A simple example demonstrates why nighttime photographs are misleading. (I'll ignore the many image fidelity problems of nighttime photographs and consider only the viewer factors.) Suppose a driver failed to see a pedestrian at night and struck him with his vehicle. An attorney hires a photographer to take pictures of a recreation of the accident scene with an exemplar pedestrian. The attorney then shows the pictures to the jury with the intent of proving that the pedestrian (or truck, etc.) was highly visible and conspicuous.

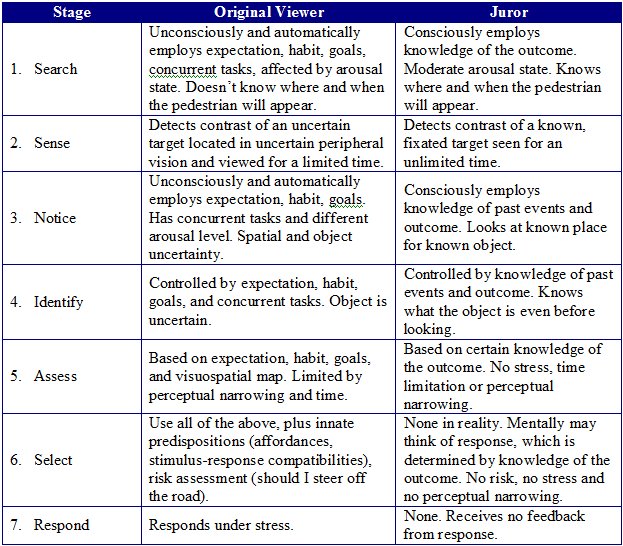

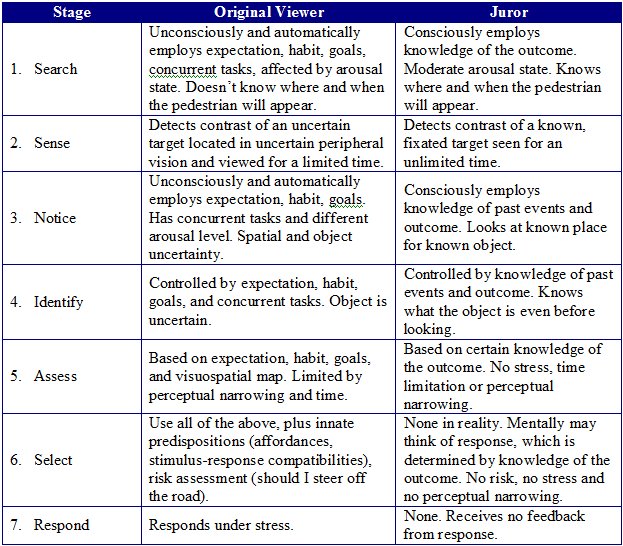

The table (Green, et al, 2008) shows the difference between the driver and juror viewing the scene. In order to avoid collision, the driver would have to perform a series of mental processes. He must search for an object at likely locations, sense the contrast, identify the contrast as a pedestrian, assess the current situation as requiring response, select a response and then finally respond. At each stage, he is operating much differently than the juror.

At every stage, the juror is free of the encumbrances of uncertainty, expectation, intention, and goal that shackle the driver. How can the jurors make a fair assessment of visibility and conspicuity? By its very definition, conspicuity is the attraction of attention to unexpected objects at unexpected locations. How can jurors assess the conspicuity of a known and expected object?

However, the problems run still deeper because jurors (like just about everyone else) hold many myths and misconceptions about seeing. They suffer from "naive realism," the false belief that their vision is complete and objective. The juror will almost certainly ignore all scientific evidence about the limited image fidelity and about the sensory and cognitive differences between himself and the defendant, and conclude, "If I can see the pedestrian, the driver should have been able to see him, too." Jurors will engage in extreme hindsight bias.

Some people who present pictures as evidence are well aware of the limitations discussed above, and do not claim that they represent what the driver would have seen. Rather, they say that they have performed a "visibility analysis" and explain that the pictures only show "what was there to be seen." This assertion is both misleading and disingenuous for many reasons.

I have already explained that seeing is inherently a psychological phenomenon and does not exist independently of the viewer. To review: first, the pictures cannot take into account the sensory factors of viewing duration, adaptation state, visual field location, etc.

Second, and more importantly, people do not see light, color and contrast as much as they see meaning. There is no conscious perception that is independent of expectation, beliefs and goals. Seeing isn't so much believing as believing is seeing. Jurors do not share the driver's expectations, beliefs and goals, and they do not understand that everything they see is an interpretation. Instead, they treat pictures the way most people do: "Photographs and images on video are typically seen as direct copies of reality" (Messaris, 1997).

As a result, no amount of linguistic argument or scientific analysis will completely wipe away the misleading impressions that pictures can create. Once jurors see realistic-looking pictures, they will implicitly believe that the pictures represent what the original viewer perceived or should have perceived. Suppose the pedestrian had emerged from the dark roadside into the peripheral field of a driver who was adapted to his headlamp illumination. The juror can look directly at the correct point in the picture, already knowing that the vague blob is a pedestrian, and can look for as long as he likes through properly adapted eyes. The juror will automatically assume that this is what the driver could have seen. He does not understand that perception is not a conscious choice. The driver could not have seen what the juror sees. Lastly, jurors will unconsciously extrapolate beyond the misleading pictures and draw a host of errant inferences (Kanazawa, 1991).

High Dynamic Range Photography (HDR) And Simulation

HDR theoretically allows far superior images compared to LDR. By taking a series of photographs at different exposure durations, a photographer can create image data with a much wider and more accurate range of colors and contrasts. Although the technology has some photographic constraints (McCann & Rizzi, 2007), the resulting images are primarily limited by the display devices, which usually cannot show all the data that the camera can capture. At the very least, the pictures must be shown on high-quality electronic displays. The quality achievable with prints is far too poor for full rendering of most LDR images, let alone HDR images.
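The core idea of merging bracketed exposures can be sketched for a single pixel. This minimal version assumes a linear camera response and exactly known exposure times. Practical HDR software also estimates the camera's response curve and aligns the frames, which this sketch does not attempt, and it is not a description of any particular product.

```python
def merge_hdr(pixel_values, exposure_times, low=0.05, high=0.95):
    """Estimate relative scene radiance for one pixel from several exposures.

    Assumes a LINEAR camera response (pixel value proportional to
    radiance x exposure time, clipped to [0, 1]). Each exposure yields the
    estimate value / time; near-black or near-saturated readings are skipped,
    and the rest are averaged with a hat weight that favors mid-range values.
    """
    num = den = 0.0
    for v, t in zip(pixel_values, exposure_times):
        if v <= low or v >= high:
            continue                      # unreliable: too dark or saturated
        w = min(v - low, high - v)        # hat weight, largest near mid-range
        num += w * (v / t)
        den += w
    if den == 0.0:
        raise ValueError("no usable exposure for this pixel")
    return num / den


times = [1 / 500, 1 / 60, 1 / 8, 1 / 2]       # hypothetical bracketed shutter speeds (s)
readings = [min(1.0, 6.0 * t) for t in times]  # pixel with true relative radiance 6.0
print(merge_hdr(readings, times))
```

The shortest exposure is too dark and the longest saturates. The estimate therefore comes from the two middle readings, which agree on a relative radiance of 6.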

Use of HDR also introduces the issues of calibration inherent in the use of any scientific instrument. If I took readings with a light meter, I would have to prove that the meter was accurate. Similarly, the HDR technique relies heavily on correct camera exposure durations (and on the linearity of the camera's response), so these should be authenticated as accurate. Further, the fidelity of HDR images can only be evaluated by comparing light measurements taken in the natural scene with measurements of the displayed images under courtroom viewing conditions.
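The comparison this paragraph calls for can be summarized as a per-point ratio between calibrated spot-meter readings of the scene and of the displayed picture. In the sketch below, the point names and readings are hypothetical. Expressing the error in log10 units is one convenient choice among several.

```python
import math


def luminance_match_report(scene, display):
    """Compare spot luminance readings (cd/m^2) taken at the same points in the
    real scene and on the courtroom display. Returns per-point ratios
    (display / scene) and the worst-case deviation in log10 units."""
    ratios = {name: display[name] / scene[name] for name in scene}
    worst = max(abs(math.log10(r)) for r in ratios.values())
    return ratios, worst


# Hypothetical meter readings; the point names are illustrative only.
scene_readings = {"pedestrian": 0.3, "road": 0.5, "sky": 0.01}
display_readings = {"pedestrian": 0.6, "road": 0.5, "sky": 0.1}
ratios, worst = luminance_match_report(scene_readings, display_readings)
print(ratios)
print(f"worst deviation: {worst:.2f} log units (factor {10 ** worst:.1f})")
```

In this made-up case the road matches, but the pedestrian is displayed at twice its real luminance and the sky at ten times its real luminance.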

While HDR allows higher image fidelity, it still suffers from the same sensory and cognitive problems as LDR. Driver viewing duration, light adaptation, visual field location, expectation, attention, arousal, etc. still cannot be simulated. Moreover, HDR pictures can look so realistic that they will likely foster even greater hindsight bias than LDR images.

Last, some who wish to show jurors what a viewer would/could have seen use computer graphics software rather than photography. The resulting images are subject to the same questions that arise with photographic images. Moreover, low-quality computer graphic images often fail to depict important visual cues such as texture gradient and shading. Of course, there is always the danger of "garbage in, garbage out": the data used to generate the graphics must be reliable.

Conclusion

I have explained that pictures cannot be used to show jurors what a viewer would, could or should have seen, and have outlined some of the scientific grounds for challenging photographic evidence. There are problems in creating high-fidelity images, especially of nighttime scenes, and in presenting them in the courtroom. More importantly, the notion of "could/should" implies that the viewer has a free choice. He does not.[10] He is constrained by both the sensory and cognitive factors that I have described. The phrase "available to be seen" begs the question, "seen by whom under what conditions?" Seeing is always subjective. There is no objective reality that can be transmitted by photographic images. Those who believe so simply do not understand how perception works.

There are many technical problems in creating high image fidelity, especially in nighttime pictures. The optical problems are the easiest to solve. Correct camera placement creates proper perspective, although the resulting field size and image resolution may be compromised. In theory, creating correct object size in the courtroom is straightforward but may have some practical problems of maintaining correct viewing distance and angle. Photometric limitations also exist, especially in nighttime photographs where it is virtually impossible to create ideal exposure.

Even if these problems were overcome, the notion that a scene reality can be transmitted via pictures to jurors is wrong. Sensory factors of viewing duration, visual field location and light adaptation cannot be recreated in the picture. Seeing is inherently an interpretation based on knowledge, expectation, intention and goals.

Perhaps the underlying problem is simply that judges and jurors have no understanding of how seeing really works, so they cannot appreciate that what they are seeing may be very different from what the driver was seeing or even could see. Calling the pictures a "visibility study" changes nothing, because once the jurors see the pictures, the harm is done. No amount of instruction, logic or reason can stop jurors from interpreting the images and drawing their own conclusions. We are visual creatures, the product of millions of years of visual evolution. In contrast, language is a relatively recent evolutionary development. Our gut usually tells us to rely on images rather than on words.

References

Dellinger, H. (1997). Words are enough: The troublesome use of photographs, maps, and other images in Supreme Court opinions. Harvard Law Review, 110(8), 1704-1753.

Evans, R. M. (1976). Using photography to preserve evidence. Kodak Publication M-2.

Graham, M. (1982). Evidence and trial advocacy workshop: Relevancy and exclusion of relevant evidence: Real evidence. Criminal Law Bulletin, 18, 241.

Green, M., Allen, M., Abrams, M., & Weintraub, L. (2008). Forensic vision. Tucson: Lawyers & Judges Publishing.

Kanazawa, S. (1991). Seeing is believing - or is it? Attacking video and computer reconstructions. Emerging Issues in Automobile Product Liability Litigation. American Bar Association Meeting, Tucson, Arizona.

Land, M. (2006). Eye movements and the control of actions in everyday life. Progress in Retinal and Eye Research, 25, 296-324.

McCann, J., & Rizzi, A. (2007). Camera and visual veiling glare in HDR images. Journal of the Society for Information Display, 15, 721-730.

Messaris, P. (1997). Visual persuasion: The role of images in advertising. Thousand Oaks, CA: Sage.

Norretranders, T. (1998). The user illusion: Cutting consciousness down to size. New York: Viking.

Tejerina, L. (2003). Committee report: Conspicuity enhancement for police interceptor rear-end crash mitigation. https://www.fleet.ford.com/showroom/CVPI/pdfs/CVPI_Conspicuity_Report.pdf. Retrieved 1 November 2006.

Endnotes

*This article is misnamed. It should be something like "Photographs vs. Natural Viewing," because directly viewing the natural scene does not reveal reality, either.

1It is easy to show that viewers do not directly perceive objects or even retinal images. Once stimulated by the retinal image, the eye's rod and cone photoreceptors produce electrical activity that propagates through a series of intermediate cells to the brain, where perception presumably occurs. The experience of perception is ultimately based on electrical activity in the brain and not directly on the image. There are no pictures in the head to perceive.

2I should say "images" rather than "image" because most viewers have two eyes and therefore two retinal images. Viewers of natural scenes can use the differences between the two images (binocular disparity) to perceive scene layout and surface orientation. This is impossible with flat pictures and constitutes a major deficiency in some situations. For more explanation, see Green, et al. (2008).

3Visibility is the ability to sense an object. Conspicuity is the ability of the object to engage attention and to be consciously perceived. These are very different visual functions. Viewers often, or even generally, fail to notice highly visible objects.

4This is an approximation that works only for small angles. For larger angles, the exact formula, visual angle = 2 × arctan(size / (2 × distance)), should be used.

5The conversion is degrees = radians × 180/π.
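Notes 4 and 5 can be checked numerically. The Python sketch below compares the small-angle approximation (size/distance, in radians) with the exact visual angle, 2 × arctan(size / (2 × distance)), converting both to degrees. The function names and the example object size and distances are illustrative assumptions.

```python
import math


def visual_angle_small_deg(size: float, distance: float) -> float:
    """Small-angle approximation: angle (radians) ~ size / distance, in degrees."""
    return (size / distance) * 180 / math.pi


def visual_angle_exact_deg(size: float, distance: float) -> float:
    """Exact angle subtended by an object of width `size` centered on the line of sight."""
    return 2 * math.atan(size / (2 * distance)) * 180 / math.pi


# Size and distance must be in the same units (here meters).
for size, distance in [(1.8, 100.0), (1.8, 10.0), (1.8, 1.0)]:
    approx = visual_angle_small_deg(size, distance)
    exact = visual_angle_exact_deg(size, distance)
    print(f"{size} m at {distance} m: approx {approx:.3f} deg, exact {exact:.3f} deg")
```

For a 1.8 m object at 100 m the two values agree to three decimal places; at 1 m the approximation overstates the angle by about 19 degrees.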

6There are several explanations as to why 50 mm is the "standard" focal length. One is historical accident: when Oskar Barnack designed the first popular 35 mm cameras (the Leica), a 50 mm lens fit his cameras best. Another explanation is that the most common photographic print sizes, 3" x 5" and 4" x 6", produce about the right visual angles when held at a normal viewing distance.

7There are exceptions. Very short focal length lenses do create various artifacts, such as barrel distortion.

8Visibility and conspicuity are distinct perceptual functions. However, contrast is one of many factors that determine conspicuity.

9There have been many explanations for the choice of 18%. Here's the correct one. The 18% number originates in a misunderstanding of an old Kodak study, which attempted to determine average reflectance for their automatic exposure cameras. They found that average scene reflectance was 12%. They recommended setting exposure a half stop higher than average, hence they used 18% reflectance cards as standard.
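The arithmetic behind note 9 is short: one photographic stop doubles the light, so a half stop multiplies it by √2, or about 1.41. The Python sketch below applies that factor to the 12% average reflectance reported in the note. The function name is illustrative only.

```python
def reflectance_after_stops(base_reflectance: float, stops: float) -> float:
    """Scale a reflectance value by 2**stops (one stop = a factor of two in light)."""
    return base_reflectance * 2 ** stops


average_scene = 0.12  # 12% average scene reflectance, as reported in note 9
card = reflectance_after_stops(average_scene, 0.5)  # half a stop higher
print(f"{card:.1%}")  # prints 17.0%
```

A half stop above 12% works out to about 17%, close to the 18% value that became the standard card.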

10This is the best summary of the issue: "We do not see what we sense. We see what we think we sense. Our consciousness is presented with an interpretation, not the raw data. Long before presentation, an unconscious information processing has discarded information, so that we see a simulation, a hypothesis, an interpretation; and we are not free to choose." (Norretranders, 1998).
