Death By Uber 3: "Automation Complacency" Impairs "Drivers."

Marc Green

Overview: This page continues the discussion of the 2018 Uber self‑driving car crash that killed pedestrian Elaine Herzberg. It focuses on perhaps the primary factor, "automation complacency," the inevitable loss of attentional vigilance and slowing of response when humans passively monitor high-reliability systems. This phenomenon is well-documented, well-known, and discussed by the NTSB. Yet despite warnings from some employees, Uber exacerbated the problem by reducing the number of safety "drivers" per vehicle from two to one and by making no attempt to compensate for automation's predictable effects.

The TPD reached their conclusions by relying solely on data and analyses derived from drivers of conventional vehicles. This is a fundamental error because many studies have shown that supervisors of automated vehicles perform more poorly than conventional drivers. The reduced performance is the inevitable, natural human response to tasks requiring the passive monitoring of a reliable, low-event system. In a real sense, self-driving cars create the equivalent of "impaired drivers," but not because of any "driver" intent. Supervisors simply cannot be held to the same standards of vigilance and response time as normal drivers when the automation unexpectedly fails.

"Automation Complacency Impairs Drivers"

In contrast to the TPD, the NTSB report readily acknowledges this reality by frequently noting that one collision factor was "automation complacency,"1 which occurs when a human passively monitors a highly reliable system over an extended period. The NTSB report cites evidence that automation complacency is found across a wide variety of domains where humans supervise automated systems. For example, the NTSB report says:

"Automation performs remarkably well most of the time and therein lies the problem. Human attention span is limited, and we are notoriously poor monitors." (p. 62)

"When it comes to the human capacity to monitor an automation system for its failures, research findings are consistent: humans are very poor at this task." (p. 44)

The consequences of automation complacency are a loss of performance similar to that found in drivers impaired by other factors. The most commonly noted losses include the "vigilance decrement," a lower ability to notice changes to system state, and slower responses when the system fails and the human must intervene. The ultimate cause is a combination of expectation, lowered arousal, and fatigue. When nothing has ever gone wrong, humans begin to lose mental sharpness.

The effects of expectation are sometimes termed "familiarity blindness," which occurs when a human has experienced one state of the world and expects it to continue (Näätänen & Summala, 1976). For example, many studies find that drivers quickly stop noticing road information after only a short time or a small amount of experience traveling the same route (e.g., Martens & Fox, 2007; Young, Mackenzie, Davies, & Crundall, 2018). Prolonged exposure also shrinks the "useful field of view" (UFOV), the area of the visual field noticed at a glance (e.g., Rogé, Pébayle, Kiehn, & Muzet, 2002). A smaller UFOV means that the viewer is less likely to notice objects in the peripheral field, such as a pedestrian approaching from the side. This "checking out" routinely occurs in normal driving. Everyone has experienced "highway hypnosis," where you are driving and suddenly realize that you have traveled five, ten, or more miles without any conscious awareness of having controlled the vehicle.2

In these studies, the subject was the driver of a conventional vehicle. When the human is in an automated vehicle, the effects are magnified, doubtless due to the low need to intervene (Greenlee, DeLucia, & Newton, 2022). There is a nearly universal finding that supervisor perception-response time (PRT) increases with the amount of time that driving is automated (e.g., Funkhouser & Drews, 2016a, 2016b) and that automated driving slows hazard detection (Greenlee, DeLucia, & Newton, 2018). Most of these studies used only level 2 automation. Because automation complacency increases with automation level (e.g., Shen & Neyens, 2014), it should be even greater for supervisors of level 3 vehicles.

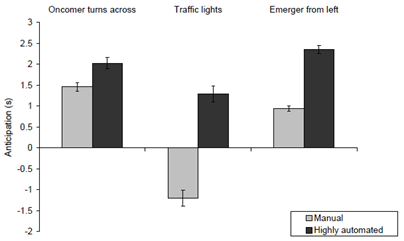

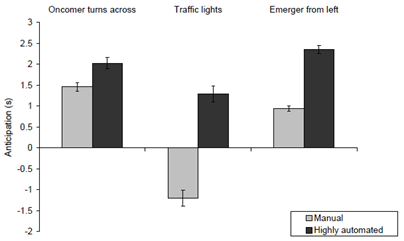

Figure 4 (Merat & Jamson, 2009) shows an example of automated driving's effect on PRT. It compares the PRT of drivers of conventional vehicles with that of supervisors of "highly automated vehicles" (approximating level 3) in a simulator. In all conditions, supervisor PRT was significantly longer. The most relevant condition is on the right: a vehicle appeared from the left three seconds before entering the automated vehicle's lane. The manual driver responded in about 0.9 seconds, while the automated vehicle supervisor responded in about 2.4 seconds, a difference of 1.5 seconds. These absolute values would doubtless change with conditions, but they demonstrate that supervisors differ from drivers and are likely to have longer response times. It is also noteworthy that these data were obtained from a test session that lasted only 40 minutes.

Figure 4 PRT for manual drivers and supervisors of highly automated vehicles (Merat & Jamson, 2009).
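To make the size of this gap concrete, the short Python sketch below works through the arithmetic using the approximate values quoted above: a 3-second warning and PRTs of about 0.9 s (manual driver) and 2.4 s (supervisor). The travel speed of 40 mph is a hypothetical value chosen only for illustration; it does not come from the Merat and Jamson study or from the Uber crash. The "margin" here is simply warning time minus PRT, a simplification that ignores how long the braking or steering maneuver itself takes.

```python
# Worked arithmetic for the Merat & Jamson (2009) condition described above.
# PRT values are the approximate figures quoted in the text. The speed used
# for the distance conversion is a HYPOTHETICAL value for illustration only.

MPH_TO_M_PER_S = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def time_margin(warning_s: float, prt_s: float) -> float:
    """Seconds of the warning left over once the human has begun to respond."""
    return warning_s - prt_s


def distance_during_prt(speed_mph: float, prt_s: float) -> float:
    """Meters traveled at constant speed before any response begins."""
    return speed_mph * MPH_TO_M_PER_S * prt_s


WARNING_S = 3.0          # other vehicle appeared ~3 s before entering the lane
PRT_MANUAL_S = 0.9       # driver of a conventional (manual) vehicle
PRT_SUPERVISOR_S = 2.4   # supervisor of a highly automated vehicle
SPEED_MPH = 40.0         # hypothetical speed, not taken from the study

if __name__ == "__main__":
    for label, prt in [("manual driver", PRT_MANUAL_S),
                       ("supervisor", PRT_SUPERVISOR_S)]:
        print(f"{label}: {time_margin(WARNING_S, prt):.1f} s margin, "
              f"{distance_during_prt(SPEED_MPH, prt):.1f} m before responding")
    extra_m = distance_during_prt(SPEED_MPH, PRT_SUPERVISOR_S - PRT_MANUAL_S)
    print(f"extra distance traveled by supervisor: {extra_m:.1f} m")
```

Under these assumptions, the supervisor's extra 1.5 seconds corresponds to roughly 27 meters traveled before any response begins, and it leaves only about 0.6 seconds of the 3-second warning for the maneuver itself, compared with about 2.1 seconds for the manual driver.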

The supervisors' results are what might be expected from an impaired driver. However, it is impossible to compare automation complacency directly with, say, intoxication level, because there is no way to determine an equivalent "dose." Still, the PRT increase in supervisors qualitatively mimics what is found in highly intoxicated drivers. It is noteworthy that the performance losses are readily found in research studies with driving sessions measured in minutes.

Moreover, some researchers (e.g., De Winter, Stanton, & Eisma, 2021) suggest that research tests of vehicle automation's effects are simply not very realistic. The drivers in the studies are subject to the same artificial elements found in PRT research. They know that they are being tested, so they are on high alert and on their best behavior, especially since a co-pilot safety driver is in the vehicle. The impairment will doubtless be much greater in the normal real world, where drivers travel for many hours over many days.

There is nothing in the research literature that comes close to the situation that the UATG created for Ms. Vasquez. The inescapable conclusion is that if a self-driving vehicle fails to provide a takeover request (TOR) well in advance, the supervisor is not likely to avoid the collision.

The conditions that produce strong automation complacency are well-known. As already mentioned, higher levels of automation produce greater complacency (e.g., Shen & Neyens, 2014). Complacency is strongest in systems where the supervisor intervenes the least, i.e., when the system is more reliable and when the supervisor is merely a passive observer. This effect was demonstrated in a study (Fu, Johns, Hyde, Sibi, Fischer, & Sirkin, 2020) of pedestrian detection in a simulated automated vehicle. The ADS had to detect whether pedestrians walking parallel to a road would cross in front of the vehicle. Supervisors were tested in two conditions. In the first set of trials, the ADS correctly detected most crossing pedestrians but failed to detect some. In this condition with misses, supervisor vigilance remained high, as evidenced by fast PRT, rapid deceleration, and successful collision avoidance. In the second set of trials, the ADS correctly detected and braked for every crossing pedestrian until a final trial in which it failed. When this occurred, most supervised vehicles struck the pedestrian. Supervisors had learned to rely on the automation and had lowered their vigilance, so they failed to detect the ADS fault. Another study (Körber, Baseler, & Bengler, 2018) found a similar result: when the ADS seemed reliable and then failed, the result was a collision. Such cases are 100 percent predictable from the vast literature on the vigilance decrement. Ms. Vasquez was a passive supervisor who had previously traversed exactly the same route 72 times, which the NTSB agrees was the reason that she was not attentive (see Part 4).

Time-on-job is another factor, but the vigilance decrement can occur very rapidly. A common estimate is 25 to 30 minutes (Mackworth, 1968). However, one study by Missy Cummings, senior advisor for safety at NHTSA, found that the average simulator driver traveling a dull four-lane road began "checking out" after 20 minutes, while some drivers exhibited a loss after only eight minutes. Asked specifically about the effects of level 3 and 4 automation on driver alertness and on the ability to take control for collision avoidance, Cummings replied:

I wrote a paper on boredom and autonomous systems, and I've come to the conclusion that it's pretty hopeless. I say that because humans are just wired for activity in the brain. (Martin, 2018)

This sentiment is echoed by virtually every researcher who studies the problem. As already noted, the NTSB, for example, concluded that "When it comes to the human capacity to monitor an automation system for its failures, research findings are consistent: humans are very poor at this task." The TPD report simply ignores any effect that expectation and automation complacency would have had on Ms. Vasquez even if she had been looking down the road.

Conclusion

Those in and around human factors universally accept and understand the basic reality that humans are poor passive monitors of highly reliable processes, such as self-driving cars. Lowered vigilance due to passive fatigue and boredom, and the expectation of a fault-free state caused by familiarity blindness, both reduce the likelihood of detection and slow the response, much as impairment does in drivers. Therefore, the TPD report erred in basing its conclusions solely on data and norms derived from the behavior of aroused drivers in conventional vehicles. Without doubt, the expected performance of Ms. Vasquez, or of any supervisor of a highly automated vehicle, will be much worse than that of a corresponding driver in a conventional vehicle. The degree of loss depends on factors such as expectation and system reliability. After 72 uneventful runs on the same circuit, Ms. Vasquez's level of automation complacency would be very high and her performance loss correspondingly great. Part 2 explained that many normal drivers would not have avoided the collision. Adding the impairing effect of automation complacency to the mix of low illumination and violated expectation further reduces the likelihood that the collision would have been avoided.

However, the odds of collision avoidance, although very low, would doubtless have been higher if Ms. Vasquez had been looking at the road. But the question is not whether collision avoidance was humanly possible for a driver looking down the road, but whether it was humanly probable. The analysis described in Part 2 suggests that many normal drivers would have failed to avoid the collision. Supervisors impaired by automation complacency would have been even less likely to avoid it. In short, failing to avoid the collision, even while looking ahead, would have been "normal" behavior given the circumstances.

I have described the ADS supervisor's mental condition as analogous to that of an impaired driver. I have also explained that it arose from the collision of human nature with circumstances. Automation complacency is not due to any intent on the supervisor's part, which is an important factor in common-sense blame allocation.

Since human nature is the least malleable part of a man-machine system, the best way to have avoided the collision was to change the circumstances. This is one of the themes of Part 4.

Looking into the future, young "drivers" learning on automated vehicles will never have the opportunity to develop the skills and experience of today's drivers, so they will be even less able to handle extraordinary circumstances when the automation fails. They will be eternal novices.

Even experienced drivers will eventually see their skills atrophy. Many hold the mistaken implicit view that the roadway system is inherently safe and that driver behavior creates the risk. This view is nurtured by incorrect beliefs, such as the notion that driver error causes 94 percent of accidents (see Part 4). In fact, the situation is exactly the reverse.

It is human behavior that supplies the safety to an inherently dangerous system. It may be human nature to focus on negative events such as fatal collisions, but considering the millions of drivers who travel billions of miles every year, the rate of serious mishaps is remarkably small. However, drivers of the future who learn on self-driving cars will be less able to supply that safety when needed. There will be a race between the degradation of the driver's or supervisor's ability to handle emergencies and the capabilities of the ADS.

Lastly, shouldn't Ms. Vasquez at least be held accountable for looking away from the road, even if she couldn't have avoided the collision by looking ahead? For some, the answer might hang on the question "Was she performing a job-related task, as she claimed, or was she merely watching TV?" The next part, which examines the NTSB report, explains why this is the wrong question to ask.

Endnotes

1 NTSB defined automation complacency following Prinzel (2002): "self-satisfaction that can result in non-vigilance based on an unjustified assumption of satisfactory system state." As the next part explains, this sort of explanation is no explanation at all: it is a "folk psychology" definition based on unobservable variables, which have no explanatory power. I discuss this issue in Part 4 and in the page on inattention. For now, I'll just accept the term as a label for the effects of boredom and low arousal created by the repeated monitoring of a low-event system.

2 This is possible because humans have two visual systems. The focal system identifies objects and their locations, and it can operate consciously or unconsciously. Locomotion tasks, however, are handled by a second, ambient system that operates largely outside of awareness.

Next: Death by Uber 4: The NTSB Report
