"The Grand Illusion": Assigning Blame In Failure To See

Marc Green

This article discusses how we see. The topic may not be on everyone's lips, but that does not make it unimportant. In fact, I shall explain that a knowledge of seeing is essential for understanding accidents and for assigning blame in court. It is going to be a rough ride for some people because the discussion introduces ideas that run contrary to intuition and to subjective experience. This is unavoidable because most people's intuitions about seeing are incorrect and because subjective experience masks the true nature of seeing by making it automatic and effortless. Moreover, a discussion of seeing delves into issues such as the nature of consciousness and of reality. Much of this may sound like egghead philosophical navel-gazing. On the contrary, it is practical and absolutely essential for the fair and just assignment of blame in court, as well as in life.

Seeing is an important legal issue because courts frequently assess blame based on whether a viewer should have seen some object or piece of information. Unfortunately, most people, including judges, jurors, attorneys, and even most "experts," don't understand how seeing works, so they do not appreciate how various factors affect the ability to see. As a consequence, there are many tragic mis-assignments of blame on viewers who were simply acting normally, adaptively and even intelligently. I've seen so many mis-assignments of blame lately that I feel compelled to explain the basics of seeing. The starting point for correcting this situation is to recognize that:

| Basic Rule 1: You cannot determine whether a viewer is at fault for failing to see a pedestrian, or anything else, unless you understand how seeing works. |

Basic Rule 1 has great generality: you cannot fairly judge why a person fell down stairs unless you understand normal stair descent; you cannot fairly judge why a person failed to avoid a collision unless you understand how people normally avoid collisions; you cannot fairly judge why a person failed to comply with a warning unless you understand how people normally process information into existing schemata; and so on. Unfortunately, the courts seem to be overflowing with "experts" who give opinions despite an utter lack of background or knowledge about the way humans perform basic tasks like seeing, walking, thinking, and remembering.

Theory

Most people have never thought about how they see and have probably never thought that seeing even requires explanation. It just happens. At most, they have a vague intuitive notion that vision scientists call the "Homunculus theory." The eyes are like cameras that project an image onto an inner screen where a little man, the Homunculus, views it. There are many reasons why this scheme is wrong, most obviously that it raises the question of how the Homunculus sees the image. (Is there a second Homunculus inside the first, and a third inside the second, on and on into infinite regress?)

Moreover, most people suffer from "naïve realism", the false notion that seeing is a passive process where the eyes transmit a complete and objective reality directly to our consciousness. Naïve realism is naïve because seeing is active, selective and highly subjective.

Naïve realism has powerful consequences for blame assignment, which is why it is so important to understand its naïveté. For example, suppose that a driver strikes a pedestrian on a dark road. During a re-creation, an observer standing at the driver's viewpoint can see the pedestrian. The observer then goes to court and claims that the driver was at fault because he could have seen the pedestrian. The observer might even have taken photographs to bring into court as a means for demonstrating his conclusion's validity.

However, this conclusion is based on naïve realism. The observer assumes that seeing is an objective, complete, and passive process that just happens when a viewer looks at a scene. Said another way, the sole determinant of seeing is the light entering the viewer's eyes. This assumption is wrong and reflects a fundamental misunderstanding about the nature of seeing.

It is easy to understand why people make this assumption and don't understand seeing: it occurs automatically and without conscious awareness. We just look, and we see. There is little or no conscious thought or effort, so it seems that we must not be doing anything. I run into the same problem when I testify about falls. It is highly counterintuitive that remaining upright and walking are complex tasks requiring an intricate sensorimotor integration process. What most people fail to appreciate is that nature has, thankfully, made most of our everyday survival skills (seeing, walking, etc.) effortless, so that our limited conscious resources can be directed toward novel problems for which we have no hard-wired or automatic behaviors.

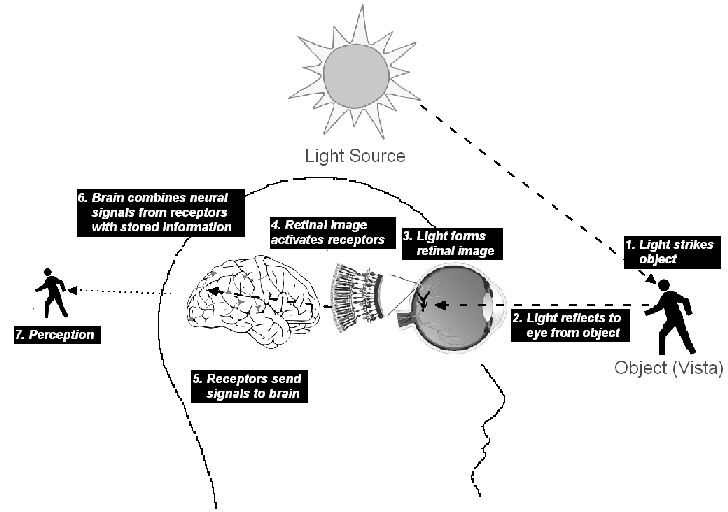

Seeing is best understood by examining how it constrains our knowledge of the world. Visual scientists typically divide the chain of events shown in the Figure into two components, sensation and perception. Stages 1-5 result in sensations, the raw material that cognition (stages 6-7) uses to create perception, the conscious experience of the world. Sensation is often viewed as a "bottom-up" passive process that just happens, while perception is a "top-down" active process that imposes order and meaning on sensations.

1. The viewer may receive the electromagnetic energy by directly looking at the source, but in most cases, the source first illuminates a surface;

2. The surface reflects the energy to the eye. The array of projected scene surfaces is the "vista";

3. The electromagnetic energy projected from the vista passes through optical components to form an upside-down image on a light-sensitive layer, the retina, which is very roughly analogous to the film or photosite layer in a camera. Two constraints are already apparent. First, the retina is small, so it can only capture an image from a restricted area of the vista. Second, the retinal image is two-dimensional (2-D), but the world is presumably three-dimensional (3-D). The projection loses depth, distance along the Z-axis. As explained later, the lack of depth further results in loss of information about size, speed, and shape. These are key limitations to vision and to the ability to avoid collisions. If a driver could not judge depth because of the 2-D retina, how could he determine that another vehicle is approaching him and that a collision is imminent?

4. The next constraint occurs at the retina's "photoreceptors," cells that convert the electromagnetic energy into electrical impulses through the chemical process of transduction. The photoreceptors contain pigments that change shape ("isomerize") and release energy when struck by photons. However, the photoreceptors can only transduce a very small band of the electromagnetic spectrum, about 370 to 730 nm (1 nm = 10⁻⁹ m). The range of electromagnetic wavelengths falling in this "visible spectrum" is "light." Wavelengths shorter than 370 nm are in the "ultraviolet," while wavelengths longer than 730 nm are in the "infrared."

Why this particular band? The answer is evolution, a slow form of adaptation to the environment. The visible spectrum is presumably tuned to the aspects of the environment that are most critical for survival. For example, the eye's peak spectral sensitivity is at a wavelength that roughly corresponds to green, the predominant color of plants, a major food supply. The limits of the visible spectrum are probably not accidental either. Humans can't see into the ultraviolet wavelengths, where light scatter is so great that perception would require looking through a perpetual blue-violet haze. Even at the short-wavelength end of the visible spectrum, the problem is sufficiently great that we need the lens and cornea to be yellow so that they can block much of the short-wave scatter. Further, humans can't see into the infrared, where diffraction is so great that we would need very large pupils to prevent interference fringes. Wide pupils would result in poorer retinal image sharpness and smaller depth of field. The visible spectrum is a Goldilocks zone in the electromagnetic spectrum, at least for our species.

Another constraint is the uneven photoreceptor distribution in the retina. The photoreceptors provide only samples of the continuous retinal image. They are packed tightly in a regular mosaic in the center, so the sampling rate is high, allowing fine resolution. With greater eccentricity (distance from the center), their spacing increases, so the sampling rate falls. Less detail is encoded, but less mental processing is needed. Further, the mosaic becomes increasingly irregular. This reduces aliasing, a type of distortion that occurs in systems with low sampling rates. However, it causes another kind of distortion, "crowding," where contours are jumbled together. Crowding is possibly the major constraint on peripheral vision in daylight;

5. The electrical impulses propagate through a network of other nerve cells in the retina before traveling up the optic nerves and optic radiations along the primary visual pathway to the brain. The primary visual brain area, V1, is right below the "inion," the bump on the back of the head.

During this transmission, the nerve impulses are altered and processed through a series of "receptive fields," whose responses are limited by the next constraint, the "spatiotemporal window" (e.g., Green, 1992). Like all information transmitting systems, the visual system has a limited bandwidth. We can only see spatial and temporal information that is transmitted within a frequency band bounded at 60 cycles/degree in space and 60-100 Hz in time under ideal conditions. The window is not flat: it has a spatial peak at about 3 cycles/degree and a temporal peak at about 2-3 Hz. However, the window shrinks and the peak locations change under many circumstances, such as low contrast, dim lighting, small size, and retinal eccentricity. The impulses then spread from primary visual cortex to other brain areas, most notably through a dorsal/ambient/where stream and a ventral/focal/what stream. In a real sense, the brain has two distinct visual systems that operate in parallel and serve different functions;

6. The sensory input combines with impulses generated by other information already stored in the brain. Psychological processes begin to occur. One is the selection of a subset of the sensory input for deeper processing. The selection produces perhaps the most important (or at least most obvious) human information processing limitation, the attentional bottleneck. The need to expand the attentional bottleneck drives much of our cognitive architecture. Fortunately, we have a large number of adaptations to loosen the constraint. These include attentional mechanisms such as expectation and automaticity, decision mechanisms such as heuristics, biases, and satisficing, and even an entire visual pathway that allows us to guide locomotion with a minimal drain on focal attention and consciousness; and

7. Seeing occurs. That is, there is conscious perception, the attachment of meaning to the sensations that have been created from the raw material of the visible spectrum projected onto the 2-D retina pointed in a particular direction after being filtered through the photoreceptor array, the spatiotemporal window, and the attentional bottleneck.
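Two of the constraints in stages 3 and 5 can be made concrete with the standard geometry of visual angle. The sketch below is illustrative only. The function names and the example sizes and distances are hypothetical. The 60 cycles/degree ceiling is the ideal-condition figure quoted in stage 5. The first part shows depth loss: when an object is twice as large and twice as far away, it subtends the same angle, so the 2-D image alone cannot separate size from distance. The second part checks whether a striped pattern falls inside the spatial limit of the window.

```python
import math


def visual_angle_deg(size: float, distance: float) -> float:
    """Visual angle in degrees subtended by an object of `size` viewed from
    `distance` (same units): 2 * atan(size / (2 * distance))."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2 * math.atan(size / (2 * distance)))


def cycles_per_degree(period: float, distance: float) -> float:
    """Spatial frequency of a repeating stripe pattern whose period (one
    light bar plus one dark bar) is `period`, viewed from `distance`."""
    return 1.0 / visual_angle_deg(period, distance)


# Ideal-condition ceiling quoted in the text. Real limits are lower under
# low contrast, dim light, small size, or peripheral viewing.
IDEAL_LIMIT_CPD = 60.0


def inside_window(period: float, distance: float,
                  limit: float = IDEAL_LIMIT_CPD) -> bool:
    """True if the pattern's spatial frequency is at or below `limit`."""
    return cycles_per_degree(period, distance) <= limit


# Depth is lost in the 2-D image: a 1 m object at 10 m and a 2 m object at
# 20 m subtend the same angle, so the image alone cannot tell them apart.
small_near = visual_angle_deg(1.0, 10.0)
large_far = visual_angle_deg(2.0, 20.0)

# Hypothetical 1 cm stripes seen from 10 m and from 40 m.
print(round(cycles_per_degree(0.01, 10.0), 1), inside_window(0.01, 10.0))
print(round(cycles_per_degree(0.01, 40.0), 1), inside_window(0.01, 40.0))
```

Under these definitions, 1 cm stripes come out at roughly 17.5 cycles/degree at 10 m, well inside the window. At 40 m they come out at roughly 70 cycles/degree, beyond even the ideal limit. Passing a smaller `limit` would roughly model the shrunken window under poor conditions.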

Lastly, limited sensory and mental capacities are not the only constraints on human behavior and survival. The other constraint is time. Humans cannot respond to what they see instantaneously. There is always a time lag from perception to action. Both mental and motor latencies force humans to spend their lives playing catch-up to changes in the environment. Late detection (Rumar, 1990), meaning lack of time to respond, is perhaps the major cause of collisions. Wouldn't it be nice if humans could see into the future? Then there would be more time to respond. Fortunately, vision gives humans the ability of "mental time travel" (e.g., Suddendorf & Corballis, 1997) that integrates the past and present to predict the future. The prediction comes from two sources, one sensory and the other cognitive. The sensory source is motion extrapolation: viewers use the speed and trajectory of other objects to predict their future locations. The cognitive source is expectation, stored knowledge of how situations typically unfold. Moreover, when a driver looks down the road, he is peering not only into the distance but also into the future, toward where he will be and what he will encounter in the next few seconds.
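The time constraint and the sensory form of prediction reduce to simple arithmetic. The sketch below assumes constant speeds. The function names, the 20 m/s speed, and the 1.5 s latency are hypothetical illustration values, not standards or measured data. The first function gives the distance covered before any response can begin. The second gives a constant-velocity extrapolation of where a moving object will be.

```python
def distance_during_latency(speed_mps: float, latency_s: float) -> float:
    """Distance travelled before any response can begin: d = v * t."""
    return speed_mps * latency_s


def extrapolate(position_m: float, velocity_mps: float, horizon_s: float) -> float:
    """Constant-velocity motion extrapolation: where an object will be after
    `horizon_s` seconds if its speed and direction stay the same."""
    return position_m + velocity_mps * horizon_s


# Hypothetical values for illustration only.
car_speed = 20.0          # m/s, about 72 km/h
assumed_latency = 1.5     # s, an assumed perception-response time
print(distance_during_latency(car_speed, assumed_latency))

# A pedestrian 3 m left of the car's path, walking toward it at 1.4 m/s.
print(extrapolate(-3.0, 1.4, 2.0))
```

With these assumed numbers, the car covers 30 m before the driver can even begin to respond. The extrapolation places the walking pedestrian almost in the car's path 2 seconds later. Both results are only as good as the constant-speed assumption.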

Now stop and think about this process. First, notice that we never see the world directly. The closest we come is the image that it casts on the retina. This is why all visual analysis should start with the retinal image. Second, notice that we don't even see the retinal image, which is immediately converted to nerve impulses conducted to the brain. There are no pictures in the head and no inner viewing screen, just nerve impulses. The Homunculus, even if he existed, would be useless.

So what do we actually see? The answer is highly unintuitive but inescapable: we see something that the brain creates out of a subset of the electrical signals from the retinal image and from stored knowledge. This something has a rough correlation to some parts of the physical world, but it is not the physical world. The best example is color, which is not a property of light but which instead is purely a construction of the brain. Admittedly, nobody has the faintest clue as to how the brain actually turns these electrical impulses into conscious perception, which is an emergent property of the brain. But make no mistake that when we see, we are seeing something created by a pattern of electrical impulses and not directly the world or even the retinal image. Perception experts often refer to this world that we consciously perceive as "The Grand Illusion."

The explanation of perception outlined above raises the question: "Is the universe that physics presents to us in textbooks the 'real world'?" The answer is no. This is not just my opinion; many prominent physicists share it. Niels Bohr said, "It is wrong to think that the task of physics is to find out how Nature is. Physics concerns what we say about nature." The same sentiment has been stated more directly in the PBS Space-Time series: "The mathematics of physics don't govern reality. They are not even direct models of reality. Rather, the laws of physics are models of our experience of reality" (PBS Space-Time video https://invidious.protokolla.fi/watch?v=v-aP1J-BdvE). In Religion and Science, Bertrand Russell similarly noted, "Physics may be regarded as predicting what we shall see in certain circumstances and in this sense, it is a branch of psychology since our seeing is a mental event. This point of view has come to the fore in modern physics through the desire to make only assertions that are empirically verifiable. Combined with the fact that a verification is always an observation by some human being and therefore an occurrence such as psychology considers." This led Russell to conclude that physics should be regarded as a subdiscipline of psychology.

Of course, there is a natural resistance to believing that what we see is an illusion and not the real world. This is hardly surprising because our visual systems are designed to create this illusion. One major design goal is the illusion of stability. A comparison of our perceptions with readings from light meters shows only a weak correspondence between the two. Fortunately, our visual systems trick us into believing that we act in a stable world with objects that have fixed properties out there in 3-D space. Where meter readings signal large, chaotic changes, the perceptual system reveals only constancy. For example, a piece of coal looks black both in the bright sun and in a dark room, despite huge changes in the light that is reflected to the eye. A piece of coal in the bright sun reflects more light to the eye than a piece of white paper in a dim room. Yet the coal always looks black and the paper always looks white, as if blackness and whiteness were fixed properties of the objects. Our perceptual systems do not merely fail to show "objective reality"; they actively work to disguise it.
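The coal-and-paper example can be checked with rough arithmetic. The sketch below treats the light sent to the eye as illuminance × reflectance. This is a deliberate simplification that ignores geometry and gloss. The reflectance and illuminance values are assumed, approximate figures chosen for illustration. The sketch shows that the absolute light levels reverse the black/white ordering. The ratio between neighboring surfaces under the same illumination does not change. A ratio rule of this kind is one classic, simplified account of lightness constancy, not a complete model of what the visual system does.

```python
import math


def reflected_light(illuminance: float, reflectance: float) -> float:
    """Light sent toward the eye, treated as illuminance x reflectance.
    This ignores viewing geometry, gloss, and other real-world factors."""
    return illuminance * reflectance


# Assumed, approximate values for illustration only.
COAL, WHITE_PAPER = 0.04, 0.85          # reflectances (fraction of light)
SUNLIGHT, DIM_ROOM = 100_000.0, 100.0   # illuminances (lux)

coal_in_sun = reflected_light(SUNLIGHT, COAL)
paper_in_dim_room = reflected_light(DIM_ROOM, WHITE_PAPER)
print(coal_in_sun > paper_in_dim_room)   # the "black" coal sends more light

# What stays fixed is the ratio between neighboring surfaces under the
# same illumination, whatever that illumination is.
ratio_in_sun = coal_in_sun / reflected_light(SUNLIGHT, WHITE_PAPER)
ratio_in_room = reflected_light(DIM_ROOM, COAL) / paper_in_dim_room
print(math.isclose(ratio_in_sun, ratio_in_room))
```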

Another reason for the resistance is that visual experience is normally so fast, seamless, and effortless that we have the illusion of objectively seeing the world directly without intermediate processing. Moreover, most perceptions are "cognitively impenetrable" (Pylyshyn, 1999). We cannot introspect and change them because they occur automatically and without volition or awareness. Why does grass look green? It just does, and we have no choice. Cognitive impenetrability also gives us the illusion that we see the world directly and that nothing is happening between the ears. It explains why most people never think about vision. Of course, this is usually a good thing. People would be overwhelmed and immobilized if normal perception required a series of conscious decisions (James, 1890).

Hoffman (2018) suggests that the perceptual system is analogous to the interface on a computer. It is a layer between you and the real computer with its world of 0s and 1s. The user sees a screen with icons, menus, buttons, etc. to click, not the real workings of the computer. There are no icons, menus, or buttons inside the computer. The interface simplifies the computer and makes it usable by masking the reality, so there is no need to have a direct knowledge of what is going on inside. (Having spent many years programming in machine and assembly language, I can assure you that you don't want to deal with what's going on inside.) Similarly, our perceptions of objects with fixed properties in 3-D space are simply an interface to reality. They are not reality, but they have a useful relationship to reality.

A few simple observations demonstrate that we do not see an objective, fixed reality.

- You don't need "reality," retinal images, or even light to see. You can press on the eye or hit yourself on the head to have visual sensations. You can close your eyes and mentally "see" pictures. You can even "see" objects that are entirely creations of your mind, for example, a purple and magenta elephant with nine tusks, or completely new animals of your own invention.

- The world appears three-dimensional, yet the retinal image is only two-dimensional. Where does the extra dimension come from? The answer is that our brains must create it because it certainly isn't in the 2-D retinal image1 (unless you are a strict Gibsonian). No, stereopsis is not what gives us depth. Close one eye and the world still appears 3-D. Look at a photograph and it appears 3-D.

- "Reality" changes right before your eyes on many occasions. You walk into a movie theater during the day. It is very black and you can see little. After a while, your eyes adapt, the scene looks brighter and you can see much that was previously invisible. Which is the "real" world—the dark theater or the bright theater? The answer is obviously neither. Your brain created both.

- Each eye has a blind spot, an area of retina where the optic nerve exits and there are no photoreceptors. You should see nothing there, but you never experience this hole2. The brain fills it in and creates a continuous, stable and illusory world. The same filling-in occurs with eye diseases that create similar but much larger holes.

- People with different wiring have different perceptions. For example, "color blind" people missing middle-wavelength photoreceptors do not see red or green. Since they see different colors than "normals," their red-green blindness is viewed as a deficiency, a departure from the norm. Suppose all humans were missing middle-wavelength photoreceptors. Then no one in our species would see red or green, so this would be normal, and the colors red and green would not exist in "reality." Perception depends on wiring, not reality. This is why lights that are physically very different (e.g., "yellow" and "red"+"green" metamers) can appear identical. It is the neural wiring and not just the physical reality that determines perception. Moreover, color does not exist independently of the human brain. Color is not a physical property of light. The viewer creates it in the mind.
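The metamer point in the last item can be illustrated with a toy linear model. In this model, each receptor class reports only one number, a sensitivity-weighted sum of the incoming light. Any two lights that produce the same numbers are therefore indistinguishable downstream. The three wavelength bins and all sensitivity values are hypothetical, not measured cone fundamentals. Short-wavelength receptors are left out for simplicity. The code solves for a green-plus-red mixture that matches a pure yellow light.

```python
# Toy model: three wavelength bins, roughly "green", "yellow", "red".
# The sensitivities are hypothetical, NOT measured cone fundamentals, and
# short-wavelength receptors are left out for simplicity.
L_SENS = (0.8, 1.0, 0.6)   # long-wavelength receptor
M_SENS = (1.0, 0.8, 0.2)   # middle-wavelength receptor


def responses(spectrum):
    """(L, M) responses: each receptor's sensitivity-weighted sum of light."""
    l_resp = sum(s * p for s, p in zip(L_SENS, spectrum))
    m_resp = sum(s * p for s, p in zip(M_SENS, spectrum))
    return l_resp, m_resp


def green_red_match(target):
    """Amounts of pure 'green' (bin 0) and pure 'red' (bin 2) light whose
    mixture gives the same (L, M) responses as `target` (Cramer's rule)."""
    l_t, m_t = responses(target)
    a, b = L_SENS[0], L_SENS[2]   # L response to green, to red
    c, d = M_SENS[0], M_SENS[2]   # M response to green, to red
    det = a * d - b * c
    if det == 0:
        raise ValueError("receptor sensitivities cannot separate the two lights")
    green = (l_t * d - b * m_t) / det
    red = (a * m_t - c * l_t) / det
    return (green, 0.0, red)


yellow = (0.0, 1.0, 0.0)
mixture = green_red_match(yellow)
print(mixture)                                # physically different light...
print(responses(yellow), responses(mixture))  # ...same receptor responses
```

The mixture contains no "yellow" light at all, yet both receptors respond to it exactly as they respond to yellow. In this model, nothing downstream could tell the two lights apart.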

| Basic Rule 2: Conscious perception is the result of two factors, the light that enters our eyes and the stored memories and knowledge that we carry around in our heads as mental models, schemas, and scripts. |

Helmholtz's Rule elaborates Basic Rule 2 by saying that viewers will consciously see the interpretation that they unconsciously deem the most likely, given the constraints created by the light. At most, the light constrains likely perceptions but does not determine them.
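Helmholtz's Rule is often formalized today as Bayesian inference. Stored knowledge supplies prior probabilities for candidate interpretations (top-down). The light supplies the likelihood of the image under each interpretation (bottom-up). The sketch below swaps in that formalism purely as an illustration. It is not the author's model, and every probability in it is invented. It shows how the same expectations can override weak sensory evidence but yield to strong evidence.

```python
def posterior(priors: dict[str, float],
              likelihoods: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over candidate interpretations h of one retinal image:
    P(h | image) is proportional to P(image | h) * P(h)."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}


def perceived(priors: dict[str, float], likelihoods: dict[str, float]) -> str:
    """The interpretation deemed most likely (maximum a posteriori)."""
    post = posterior(priors, likelihoods)
    return max(post, key=post.get)


# Hypothetical numbers: a dark shape ahead on an unlit rural road.
expectations = {"pedestrian": 0.02, "shadow or roadside clutter": 0.98}
weak_light = {"pedestrian": 0.6, "shadow or roadside clutter": 0.3}
strong_light = {"pedestrian": 0.9, "shadow or roadside clutter": 0.001}

print(perceived(expectations, weak_light))    # expectation wins
print(perceived(expectations, strong_light))  # the light overrides it
```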

Basic Rule 2 also summarizes the common understanding of the many scientists who have been studying seeing over the last few hundred years. Various authors may use different terminology, "sensory vs. cognitive," "sensory vs. nonsensory," "knowledge in the head vs. knowledge in the world" or "bottom-up vs. top-down," but all agree on the basic notion that seeing employs two sources of information, one from inside and one from outside, plus some unconscious reasoning process to combine them. For example:

"We see our world of organized scenes and moving objects by combining information from the light which reaches our eyes with knowledge stored in memory about the structure and identity of objects and scenes. We understand our surroundings partly by seeing them but also by inferring what is there. Vision is therefore a genuine cognitive function, not merely a sensory one." - Hampson and Morris (1996);

"Vision is not merely a matter of passive perception, it is an intelligent process of active construction. What you see is, invariably, what your visual intelligence constructs. Just as scientists intelligently construct useful theories based on experimental evidence, so your visual system intelligently constructs useful visual worlds based on images at the eyes. The main difference is that the constructions of scientists are done consciously, but those of your visual intelligence are done, for the most part, unconsciously." - Hoffman (1998);

"We do not see what we sense. We see what we think we sense. Our consciousness is presented with an interpretation, not the raw data. Long before presentation, an unconscious information processing has discarded information, so that we see a simulation, a hypothesis, an interpretation; and we are not free to choose." - Norretranders (1998).

I am especially fond of Norretranders's summary since it captures all the essentials: We do not see the real world. We do not see the object or even the retinal image. We see an interpretation. And we have no choice. I realize that this is hard to accept, but it is the reality of seeing, at least as best we can ever hope to understand reality. (The more philosophically inclined reader may wish to consult Immanuel Kant for his views on the impossibility of knowing reality.)

Now, I know what you are thinking. People also resist the Grand Illusion because it runs contrary to everyday social experience. If we do not perceive an objective reality and if we construct our own perceptions, then why do viewers generally agree about what they are seeing? This question has several answers.

First, we are all the members of the same species with essentially the same sensory neural wiring (but see dichromats and monochromats). Naturally, a high level of agreement occurs. Our wiring is similar enough that we typically share basic experiences such as brightness and color, although these too are created in the head.

Second, we all likely share similar cognitive neural wiring, although this varies more across the population.

Third, we all live in the same environments, have similar experiences and perform similar tasks with similar goals, so we develop similar understandings of the world and sensory input.

Fourth, when we compare perceptions, social convention smooths out differences. Perceptions are private, internal experiences that cannot be directly compared among people. Instead, we make the comparisons using language, which relies heavily on social conventions. Even red-green colorblind people often describe an apple as being red.

Fifth, the disagreement among viewer perceptions is far greater than people often suppose. In most cases, we don't notice the discrepancies because they have no important consequences. When they do, as in court testimony, then startling differences among viewers are commonly revealed. The need for traffic control devices is further evidence that individual perceptions vary. They exist to impose a standard to override the variability in driver perceptions (Rumar, 1982).

Sixth, while we see an illusion, it is not an arbitrary illusion. It is a representation that has some correspondence to reality in ways that have survival value. Perhaps the main reason that people resist accepting the Grand Illusion is that it works so well.

Application

Now let's go back to the example where the observer goes to the accident re-creation, sees the pedestrian and concludes that a normal, "attentive" driver should have seen the pedestrian. This statement is based on a faulty understanding of seeing: it assumes that perception rests 100% on the light entering the eye and that this is all there is to seeing. As I have explained, this is never true. You cannot separate the perception and the perceiver:

"The world is not a fixed solid array of objects out there for it cannot be fully separated from our perception of it. It shifts under our gaze and must be interpreted by us. Whatever fundamental units the world is put together from, they are more delicate, more fragile and more fugitive and startling than we can ever catch in the butterfly net of our senses." - Jacob Bronowski (1981).

Unlike the driver, the observer standing on the roadway knows exactly where to look, what he will find and what is going to happen. He can look directly at the pedestrian for as long as he likes. Perhaps most importantly, he has no expectations, no goals, no purposes, and no competing tasks. The jurors who look at the photographs will have all the same advantages as the observer. They are different viewers from the driver, and they cannot be made to see what the driver would, and yes could, see. Remember Norretranders: "and we are not free to choose." As for the photographs, the argument that they contain "what was there to be seen" misses the point: what was there to be seen is not what a particular viewer, with his own expectations, goals and tasks, actually sees.

With this proper understanding of seeing, here is a very rough outline of a real scientific and expert analysis.

1. Analyze the retinal image and the bottom-up information. For a given scene, this bottom-up sensory information should be roughly the same for all normally-sighted viewers. The peripheral parts of the visual system (retina, photoreceptors, early visual pathway, etc.) are primitive and hard-wired into our species. There is no reason to believe that viewers will differ, except for factors such as aging, visual abnormality and the like. However, there are many relevant variables, such as duration, retinal locus, luminance, adaptation level, etc. Even at this stage, top-down cognitive information is important, since it is the prime determinant of where the driver will point his eyes. The applicable science at this stage is "visual psychophysics," a discipline which mixes physics, psychology, and physiology in order to examine how the senses operate. It defines the "boundary conditions" for seeing. If information is below sensory threshold, then the viewer can never see it. Usually, the relevant sensory threshold is for sensing contrast. In cases involving collisions, however, the critical sensory threshold is often for motion.

2. Examine how top-down cognitive factors would influence seeing and response. Drivers have 1) a mental model of how the roadway works, 2) a set of schemas, common situations that they have frequently encountered, 3) a collection of scripts, frequently occurring sequences of events, and 4) a set of goals that they are trying to achieve. These largely determine what the viewer consciously perceives, how he interprets it, and which response is appropriate. Moreover, a fundamental property of human nature is its ability to adapt and to improve efficiency by making behavior more and more automatic (requiring less attention) through experience. At every opportunity, people switch behavioral control from the conscious and resource-draining processing of attending to the light entering the eyes to an automatic and unconscious mental model. In a sense, we develop packaged subroutines that we can trigger and forget because they run with minimal supervision. In other words, we act primarily on expectations. The central issues are often: How did the situation compare to a normal driver's mental model and schemas? Was any part of the model, i.e., any major expectation, violated? The applicable science here is cognitive psychology. Lastly, humans have some hard-wired top-down predispositions in perception. These include the tendency to see bright and warm colors as foreground and dark and blue colors as background, the Gestalt principles of organization (Prägnanz), etc.

3. Determine how the top-down cognitive factors would affect processing of the bottom-up sensory information in the retinal image. Examine the relative influence of the retinal image information vs. the information stored in the head as knowledge and expectations about where to look for task-related information, what is likely there, what is likely to occur, how are other road users likely to act, etc.

Several factors can affect the balance between the bottom-up and the top-down. When viewers are in a familiar situation and/or engaged in a familiar behavior, they rely more on stored knowledge in the mental model. The familiarity may be achieved either directly through experience or vicariously by learning from other people. This same notion is expressed as a contrast between behavioral control by the past and the present:

| Basic Rule 3: Behavior that is automatic and overlearned is more about the past than the present. |

When viewers are in more novel situations for which they do not have stored ready-to-go subroutines of behaviors, then they rely more on the light. The balance can also be influenced by strength of the light entering the eye. A bright flash might engage attention better than a dim one. However, the ability of flashes, bright colors, etc. to control behavior is much less powerful than most people believe (Green, et al. 2008).

The observer who goes to the scene after the fact does none of this. The photographs that he shows the jurors contain none of this. His statements about what could or could not be seen and his photographs are at best useless and at worst misleading.
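The psychophysical boundary condition in step 1 of the outline can be sketched as a contrast check. Weber contrast is one standard definition of luminance contrast; Michelson contrast is another. The function names, luminances, and threshold below are hypothetical placeholders. A real threshold depends on the variables step 1 lists, such as duration, retinal locus, luminance, and adaptation level. Passing the check is necessary for seeing but not sufficient, because steps 2 and 3 still apply.

```python
def weber_contrast(target: float, background: float) -> float:
    """Weber contrast (L_target - L_background) / L_background, with both
    luminances in the same units (e.g. cd/m^2). Negative = darker target."""
    if background <= 0:
        raise ValueError("background luminance must be positive")
    return (target - background) / background


def above_threshold(contrast: float, threshold: float) -> bool:
    """Boundary condition: |contrast| must reach the viewer's threshold.
    Passing is necessary for seeing, not sufficient."""
    return abs(contrast) >= threshold


# Hypothetical luminances and threshold, for illustration only.
ASSUMED_THRESHOLD = 0.3
low_contrast_target = weber_contrast(0.5, 0.4)
high_contrast_target = weber_contrast(2.0, 0.4)
print(above_threshold(low_contrast_target, ASSUMED_THRESHOLD))
print(above_threshold(high_contrast_target, ASSUMED_THRESHOLD))
```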

Conclusion

Seeing is not a passive process of responding to some objective world or even to the retinal image created by that world. It is not a mechanical process where the light entering the eyes determines what the viewer will see. Rather, it is an active process of interpretation that somehow results from a pattern of brain electrical activity created both by the light that enters the eyes and by knowledge stored in the head. An unconscious reasoning process combines the two information processes to produce conscious perception. And we are not free to choose.

The judgment of whether a normal viewer should have seen an object such as a pedestrian must take both bottom-up and top-down factors into account. The perceptions of an observer who views the scene after the fact say very little about what the driver would or could have seen at the time of the accident. Similarly, juror perceptions of scene photographs are likely to be misleading.

Endnote

1 As a psychophysicist, I admit to finding such pronouncements from prominent figures in "real science", i.e., physics, amusing since any psychology student should already know the same thing after the first few lectures of a Perception 101 course.